Natural language processing (NLP) tools are revolutionizing how we interact with computers. From understanding human language to generating human-like text, these tools leverage sophisticated algorithms to unlock the power of textual data. This guide explores the evolution of NLP, key techniques, popular toolkits, and diverse applications across various industries, providing a comprehensive overview of this rapidly advancing field.

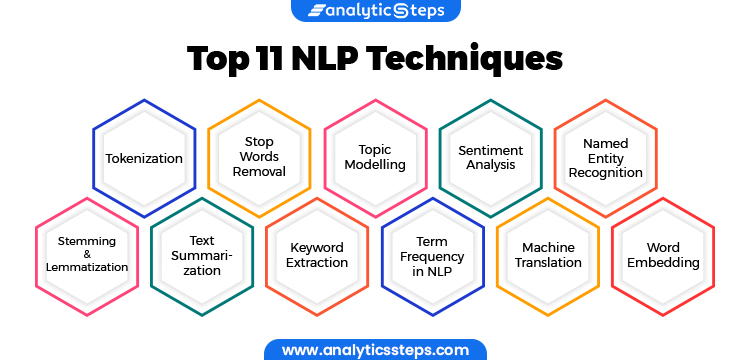

We’ll delve into the core concepts underpinning NLP, such as tokenization, stemming, and sentiment analysis, examining both traditional methods and the latest advancements in deep learning. We’ll also discuss the ethical considerations and potential challenges associated with NLP, ensuring a balanced and informative exploration of this transformative technology.

Core NLP Techniques

Natural Language Processing (NLP) relies on a suite of fundamental techniques to understand and interpret human language. These techniques break down complex text into manageable components, allowing computers to analyze meaning, context, and relationships within the data. This section will explore some core algorithms and their applications.

Tokenization

Tokenization is the process of breaking down text into individual units, called tokens. These tokens can be words, punctuation marks, or even sub-word units. Different tokenization methods exist, each with its strengths and weaknesses. The choice of method often depends on the specific NLP task and the characteristics of the text data. Below is a comparison of some common methods:

| Tokenization Method | Description | Example (Input: “Hello, world!”) |
|---|---|---|
| Whitespace Tokenization | Splits text based on whitespace characters (spaces, tabs, newlines). Simple but can struggle with punctuation. | [“Hello,”, “world!”] |
| Punctuation Tokenization | Splits text based on whitespace and punctuation marks. Handles punctuation more effectively. | [“Hello”, “,”, “world”, “!”] |
| Regular Expression Tokenization | Uses regular expressions to define patterns for token boundaries. Offers greater flexibility and control. | [“Hello”, “,”, “world”, “!”] or potentially more complex tokenization depending on the regex. |
| Subword Tokenization (e.g., Byte Pair Encoding – BPE) | Splits words into subword units, particularly useful for handling rare words and out-of-vocabulary terms in languages with rich morphology. | [“Hell”, “o”, “,”, “world”, “!”] (example, actual output depends on the BPE model training) |
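The first rows of the table can be sketched with Python's standard library alone. This is a minimal illustration, not a production tokenizer: the function names `whitespace_tokenize` and `regex_tokenize` are made up for this example, and the regular expression is just one possible choice of token boundary.

```python
import re


def whitespace_tokenize(text):
    """Split on runs of whitespace; punctuation stays attached to words."""
    return text.split()


def regex_tokenize(text):
    """Return runs of word characters and single punctuation marks as tokens."""
    # \w+ matches a word; [^\w\s] matches one non-word, non-space character.
    return re.findall(r"\w+|[^\w\s]", text)


text = "Hello, world!"
print(whitespace_tokenize(text))  # ['Hello,', 'world!']
print(regex_tokenize(text))       # ['Hello', ',', 'world', '!']
```

Changing the pattern changes the tokenization, which is the flexibility the regular expression row refers to. Subword methods such as BPE are not shown here because their output depends on a trained merge table.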

Stemming and Lemmatization

Stemming and lemmatization are techniques used to reduce words to a base form. Stemming is a simpler, rule-based approach that chops off word endings, often resulting in non-dictionary words. Lemmatization, on the other hand, is a more sophisticated process that considers the word's part of speech and uses a vocabulary (lexicon) to map words to their dictionary forms (lemmas). For example, a crude suffix-stripping stemmer might reduce “running” to “runn,” and the widely used Porter stemmer reduces “studies” to “studi,” while lemmatization would return the lemmas “run” and “study.”
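The contrast can be sketched with a toy suffix stripper and a toy lexicon lookup. Both are assumptions made for illustration: `naive_stem` is not the Porter algorithm or any published stemmer, and `LEMMAS` is a hypothetical four-entry lexicon standing in for a real one.

```python
def naive_stem(word):
    """Strip the first matching suffix without checking that the result is a word."""
    for suffix in ("ies", "ing", "ed", "s"):
        # Keep at least three characters so very short words are left alone.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


# Hypothetical mini-lexicon; a real lemmatizer uses a full dictionary
# and usually the word's part of speech.
LEMMAS = {"running": "run", "studies": "study", "better": "good", "mice": "mouse"}


def lookup_lemma(word):
    """Return the dictionary form if the word is in the lexicon, else the word itself."""
    return LEMMAS.get(word.lower(), word)


for w in ("running", "studies", "mice"):
    print(w, "->", naive_stem(w), "|", lookup_lemma(w))
```

The stemmer cannot handle an irregular form such as “mice” because no suffix rule applies, while the lexicon lookup can. That is the trade-off between the two approaches: rules are cheap but blind, and a lexicon is accurate only for the words it covers.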

Part-of-Speech Tagging

Part-of-speech (POS) tagging involves assigning grammatical tags to each word in a sentence, indicating its role (e.g., noun, verb, adjective, adverb). This provides crucial grammatical information, improving the accuracy of subsequent NLP tasks like parsing and semantic analysis. For example, the sentence “The quick brown fox jumps over the lazy dog” might be tagged with parts of speech like: “The/DET quick/ADJ brown/ADJ fox/NOUN jumps/VERB over/ADP the/DET lazy/ADJ dog/NOUN.”
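As a rough sketch, the tagged sentence above can be reproduced with a lookup tagger. The `LEXICON` dictionary and the `lookup_tag` function are hypothetical and written only for this example. Practical taggers use the surrounding words to decide between tags, which a plain lookup cannot do.

```python
# Hypothetical hand-written lexicon covering only the example sentence.
LEXICON = {
    "the": "DET", "quick": "ADJ", "brown": "ADJ", "fox": "NOUN",
    "jumps": "VERB", "over": "ADP", "lazy": "ADJ", "dog": "NOUN",
}


def lookup_tag(sentence, default="NOUN"):
    """Tag each whitespace-separated token by lexicon lookup, with a fallback tag."""
    return [(tok, LEXICON.get(tok.lower(), default)) for tok in sentence.split()]


tagged = lookup_tag("The quick brown fox jumps over the lazy dog")
print(" ".join(f"{word}/{tag}" for word, tag in tagged))
# The/DET quick/ADJ brown/ADJ fox/NOUN jumps/VERB over/ADP the/DET lazy/ADJ dog/NOUN
```

A word such as “jumps” can be a noun or a verb depending on the sentence, so a fixed lexicon entry will sometimes be wrong. Statistical and neural taggers exist to resolve exactly that ambiguity.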

Named Entity Recognition (NER)

Named Entity Recognition (NER) identifies and classifies named entities in text, such as people, organizations, locations, dates, and monetary values. Rule-based approaches might use gazetteers (lists of known entities) and patterns, while machine learning methods, often using Hidden Markov Models (HMMs) or Conditional Random Fields (CRFs), can learn from labeled data to achieve higher accuracy. For instance, in the sentence “Barack Obama was president of the United States,” an NER system would identify “Barack Obama” as a person and “United States” as a location.
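The rule-based approach can be illustrated with a tiny gazetteer matcher. This sketch assumes a hypothetical two-entry `GAZETTEER` and a made-up function name, `gazetteer_ner`. It does not show the HMM or CRF methods, which need labeled training data.

```python
import re

# Hypothetical gazetteer: known entity strings mapped to their labels.
GAZETTEER = {"Barack Obama": "PERSON", "United States": "LOCATION"}


def gazetteer_ner(text):
    """Find gazetteer entries in the text and return them in order of appearance."""
    found = []
    for name, label in GAZETTEER.items():
        # Word boundaries prevent matches inside longer words.
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((match.start(), match.group(), label))
    return [(name, label) for _, name, label in sorted(found)]


print(gazetteer_ner("Barack Obama was president of the United States."))
# [('Barack Obama', 'PERSON'), ('United States', 'LOCATION')]
```

A gazetteer only recognizes names it already lists, which is the main reason learned models tend to generalize better to unseen entities.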

Relationship Extraction

Relationship extraction aims to identify semantic relationships between entities mentioned in text. This could involve determining relationships like “X works for Y,” “X is located in Y,” or “X is married to Y.” Techniques include dependency parsing, which analyzes the grammatical relationships between words, and machine learning models trained on annotated data to predict relationship types. For example, from the sentence “Steve Jobs founded Apple,” a relationship extraction system would identify the “founded” relationship between Steve Jobs and Apple.
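A much simpler technique than dependency parsing or a trained model is surface pattern matching, sketched below purely for illustration. The pattern, the list of relation phrases (taken from the examples above), and the function name `extract_relations` are assumptions of this sketch. It treats any run of capitalized words as an entity, which is a crude stand-in for a real NER step.

```python
import re

# A run of capitalized words, used as a rough approximation of an entity mention.
ENTITY = r"[A-Z]\w*(?: [A-Z]\w*)*"

# Relation phrases taken from the examples in the text.
PATTERN = re.compile(
    rf"(?P<subject>{ENTITY}) "
    r"(?P<relation>founded|works for|is located in|is married to) "
    rf"(?P<object>{ENTITY})"
)


def extract_relations(text):
    """Return (subject, relation, object) triples matched by the surface pattern."""
    return [(m["subject"], m["relation"], m["object"]) for m in PATTERN.finditer(text)]


print(extract_relations("Steve Jobs founded Apple."))
# [('Steve Jobs', 'founded', 'Apple')]
```

Surface patterns break as soon as the wording varies, for example in “Apple was founded by Steve Jobs.” Dependency parsing and learned models address that by working on grammatical structure rather than word order.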

Natural language processing (NLP) tools are no longer a futuristic concept; they are actively shaping our world. From improving customer service through chatbots to enabling breakthroughs in medical research, the applications are vast and ever-expanding. Understanding the capabilities and limitations of NLP is crucial for harnessing its potential responsibly and ethically. As research continues to advance, we can expect even more innovative and impactful applications of NLP in the years to come.

Natural language processing (NLP) tools are rapidly evolving, offering businesses powerful ways to analyze and understand text data. These tools scale more easily and cost-effectively when they run on robust cloud infrastructure, a topic covered in the guide on cloud computing for businesses. The cloud’s capacity allows NLP tools to process larger datasets and return results faster across a wide range of applications.

Natural language processing (NLP) tools are increasingly crucial for businesses handling large volumes of textual data. Managing this data efficiently often requires robust infrastructure, which is where multi-cloud strategies become relevant for scalability and resilience. A well-planned multi-cloud approach helps ensure that NLP tools can access the resources they need and avoids single points of failure, improving the availability and throughput of NLP workloads.