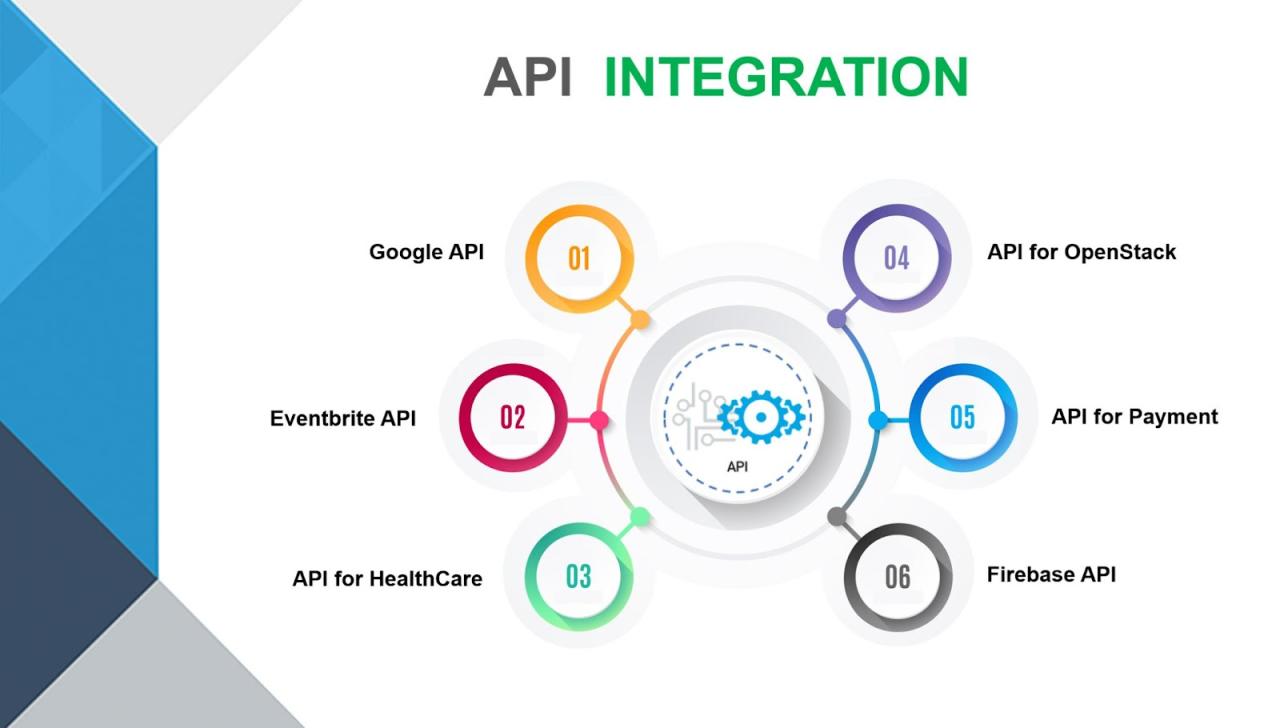

API integration for AI tools is changing how businesses put artificial intelligence to work. Connecting AI applications to existing systems through APIs lets companies automate processes, draw insights from their data, and improve their products and services, often with better scalability and lower cost than building everything in-house. This article covers the practical aspects of API integration, from choosing the right API to implementing robust security measures.

We’ll examine the various API types (REST, GraphQL, etc.), common challenges like authentication and data security, and best practices for successful implementation. Through code examples and real-world case studies, we aim to equip readers with the knowledge and tools to effectively integrate AI into their workflows.

Benefits of API Integration for AI Tools

Integrating AI tools via APIs offers significant advantages for businesses, streamlining operations and boosting efficiency. This approach allows companies to leverage the power of artificial intelligence without the need for extensive in-house development or the complexities of managing individual AI systems. The benefits extend across various aspects of business, from development speed and cost to scalability and overall efficiency.

API integration enhances scalability and efficiency in AI deployments by providing a flexible and modular approach. Instead of building a monolithic AI system, businesses can integrate different AI tools through APIs, creating a customizable and adaptable solution. This modularity enables easy scaling of resources based on demand, preventing overspending on unused capacity during periods of low activity. Furthermore, APIs simplify the management and maintenance of AI systems, reducing operational overhead and improving overall efficiency.

Improved Development Speed and Cost Reduction

Utilizing pre-built AI tools via APIs drastically accelerates the development process. Developers can focus on integrating existing AI capabilities into their applications rather than building AI models from scratch. This significantly reduces development time and associated labor costs. For example, a company developing a customer service chatbot can integrate a pre-trained natural language processing (NLP) API instead of training its own NLP model, saving months of development time and the costs associated with data acquisition, model training, and testing. This faster development cycle allows businesses to quickly launch new features and products, gaining a competitive edge in the market. The cost savings extend beyond labor; the reduced development time translates to lower overall project costs. Companies can leverage the economies of scale achieved by the API providers, who invest heavily in infrastructure and maintenance, allowing businesses to benefit from sophisticated AI capabilities at a fraction of the cost of in-house development.

Enhanced Scalability and Efficiency

API integration provides a crucial mechanism for scaling AI deployments. As business needs grow, API-based solutions can easily accommodate increased workloads by simply adjusting the API usage. This dynamic scalability prevents bottlenecks and ensures consistent performance even during periods of high demand. Contrast this with the challenges of scaling a custom-built AI system, which often requires significant infrastructure investments and complex system upgrades. For example, an e-commerce company using an image recognition API for product categorization can seamlessly handle a surge in product uploads during peak seasons without significant performance degradation. The API provider handles the underlying infrastructure scaling, ensuring the company’s application remains responsive and efficient. Moreover, APIs often provide monitoring and logging features, offering valuable insights into AI performance and enabling proactive optimization. This allows for efficient resource allocation and continuous improvement of AI operations.

Choosing the Right API for AI Integration

Selecting the appropriate AI API is crucial for successful integration. The wrong choice can lead to performance bottlenecks, compatibility issues, and ultimately, project failure. This section explores key considerations and provides a framework for making an informed decision.

The landscape of AI API providers is diverse, offering a range of capabilities and pricing models. Understanding the strengths and weaknesses of different providers is essential for aligning your needs with the right solution.

Comparison of AI API Providers

Several major providers offer AI APIs, each with different strengths. For example, Google Cloud offers a broad suite of AI services (including Vertex AI, the successor to AI Platform) covering natural language processing (NLP), computer vision, and machine learning (ML), while Amazon SageMaker provides a platform for building, training, and deploying custom ML models. OpenAI’s API focuses on large language models, which suit tasks like text generation and chatbots. Each provider has advantages and disadvantages that depend on the application and on your team’s technical expertise. Consider factors like ease of use, scalability, pricing, and the specific AI capabilities required. A direct comparison is difficult without knowing the needs of a particular project, so the key is to evaluate which provider best aligns with your technical skills, budget, and project requirements.

Decision-Making Framework for API Selection

A structured approach to API selection ensures a well-informed choice. This framework prioritizes matching the API’s capabilities to project requirements.

- Define Project Requirements: Clearly articulate the specific AI tasks your project needs to accomplish (e.g., image classification, sentiment analysis, text summarization). This forms the basis for evaluating API suitability.

- Identify Key Performance Indicators (KPIs): Determine the metrics that will measure the success of the API integration (e.g., accuracy, speed, latency, cost). These KPIs guide the comparison of different options.

- Evaluate API Capabilities: Research different API providers, comparing their capabilities against your defined requirements and KPIs. Consider factors such as model accuracy, supported languages, and available documentation.

- Assess Scalability and Cost: Determine whether the API can handle the expected volume of requests and evaluate its pricing model to ensure it aligns with your budget. Consider both upfront costs and potential scaling expenses.

- Evaluate Ease of Integration: Assess the ease of integrating the API into your existing systems. Consider the availability of SDKs, documentation quality, and community support.

- Test and Iterate: Before committing to a specific API, conduct thorough testing to validate its performance and compatibility with your project. Iterative testing allows for adjustments based on observed performance.
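One way to make the framework above concrete is a weighted scoring matrix. The sketch below is only an illustration: the criteria names, the weights, and the 0-10 ratings are hypothetical placeholders that you would replace with your own KPIs and test results.

```python
# Hypothetical weights for the evaluation criteria; they should sum to 1.0.
WEIGHTS = {
    "accuracy": 0.4,
    "latency": 0.2,
    "cost": 0.2,
    "ease_of_integration": 0.2,
}


def score_provider(ratings, weights=WEIGHTS):
    """Return the weighted sum of 0-10 ratings for one candidate API.

    Raises KeyError if a criterion has not been rated, so gaps in the
    evaluation are noticed rather than silently scored as zero.
    """
    return sum(weight * ratings[name] for name, weight in weights.items())


def rank_providers(candidates, weights=WEIGHTS):
    """Return candidate names ordered from highest to lowest score."""
    return sorted(
        candidates,
        key=lambda name: score_provider(candidates[name], weights),
        reverse=True,
    )


# Placeholder ratings for two made-up candidates, not real benchmark data.
candidates = {
    "provider_a": {"accuracy": 8, "latency": 6, "cost": 5, "ease_of_integration": 9},
    "provider_b": {"accuracy": 7, "latency": 9, "cost": 8, "ease_of_integration": 6},
}
```

Calling `rank_providers(candidates)` returns the names in preference order. Adjusting the weights is a quick way to see how sensitive the decision is to each criterion before committing to a provider.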

API Selection Flowchart

The decision-making process can be described as a flowchart with the following steps:

- Start: Define Project Needs.

- Decision: Are the needs met by existing APIs?

- If yes: Select API and Test, then finish at API Integration Complete.

- If no: Research and Evaluate New APIs, then return to the decision step.

Following this flow keeps the selection systematic and reduces the risk of choosing an unsuitable API.

Data Handling and Transformation

Integrating AI tools via APIs often involves navigating diverse data formats and handling potential inconsistencies. Successful integration hinges on efficient data transformation and robust error management. This section details strategies for ensuring seamless data flow between your systems and your chosen AI API.

Data transformation is crucial for bridging the gap between your internal data structures and the specific input requirements of the AI API. Many APIs expect data in a specific format, such as JSON or XML. If your data resides in a different format (e.g., CSV, Parquet), you’ll need to convert it. This involves using appropriate libraries and tools within your programming language to parse the source data and reconstruct it in the target format. Error handling is equally vital, as network issues or API limitations can disrupt the process.

Data Format Conversion Methods

Data format conversion typically involves several steps. First, the source data is parsed using libraries specific to its format (e.g., `csv` module in Python for CSV files, `xml.etree.ElementTree` for XML). Then, the parsed data is restructured to match the target format’s specifications. Libraries like `json` in Python are commonly used for JSON conversion. For example, converting a CSV file representing customer data to JSON might involve reading each row, creating a dictionary for each customer record, and then serializing these dictionaries into a JSON array. Complex transformations might require more advanced techniques, such as using schema mapping tools or custom scripts to handle data type conversions and data cleaning. For instance, converting dates from one format to another (e.g., MM/DD/YYYY to YYYY-MM-DD) requires explicit date parsing and formatting operations.
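The CSV-to-JSON conversion described above can be sketched with the Python standard library alone. The column names (`id`, `name`, `signup_date`) and the sample rows are made up for illustration; the date handling shows the MM/DD/YYYY to YYYY-MM-DD conversion mentioned in the text.

```python
import csv
import io
import json
from datetime import datetime


def csv_to_json(csv_text, date_field="signup_date"):
    """Convert CSV text to a JSON array of customer records.

    `date_field` is a hypothetical column holding MM/DD/YYYY dates,
    which are rewritten as YYYY-MM-DD.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        record = dict(row)
        # Parse explicitly so malformed dates raise ValueError instead of
        # passing through unnoticed.
        parsed = datetime.strptime(record[date_field], "%m/%d/%Y")
        record[date_field] = parsed.strftime("%Y-%m-%d")
        records.append(record)
    return json.dumps(records, indent=2)


sample_csv = "id,name,signup_date\n1,Ada,03/15/2024\n2,Grace,12/01/2023\n"
```

Note that `csv.DictReader` yields every value as a string, so numeric columns such as `id` stay strings unless you convert them yourself. That kind of type conversion is exactly what the target API's input specification should drive.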

Error Handling and Exception Management

API calls are inherently prone to errors, such as network timeouts, API rate limits, or invalid input data, so robust error handling is essential. Use `try-except` blocks (or similar constructs in other languages) to catch exceptions, then handle each error appropriately: log it, retry the API call after a delay, or present a user-friendly error message. For example, a `requests.exceptions.RequestException` in Python might indicate a network problem, while a specific HTTP status code (e.g., 400 Bad Request) from the API indicates an issue with the input data. Retry only transient failures such as timeouts, rate limits (HTTP 429), and server errors; a 400 response will keep failing until the input is corrected. Proper error handling keeps your application stable and provides informative feedback when failures occur. Consider exponential backoff when retrying failed requests to avoid overwhelming the API.
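A retry loop with exponential backoff might look like the sketch below. It is deliberately independent of any HTTP library: `TransientAPIError` is a hypothetical exception standing in for whatever your client raises on timeouts, rate limits, or server errors, and `func` is any zero-argument callable that performs the API call.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical marker for errors worth retrying (timeouts, 429, 5xx)."""


def call_with_backoff(func, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      sleep=time.sleep):
    """Call `func`, retrying transient failures with exponential backoff.

    The delay doubles after each failed attempt (0.5 s, 1 s, 2 s, ...) up to
    `max_delay`, plus random jitter so many clients do not retry in lockstep.
    Any other exception propagates immediately without a retry.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientAPIError:
            if attempt == max_attempts:
                raise  # Out of attempts: let the caller see the failure.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))
```

The `sleep` parameter exists only so the waiting can be replaced in tests. In real use you would wrap your client call, translating its timeout and rate-limit errors into `TransientAPIError` (or catching the library's own exception types instead).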

Optimizing Data Transfer and Processing

Efficient data handling is essential for minimizing latency and maximizing throughput. Data compression (e.g., using gzip or zlib) can significantly reduce the size of the data transferred, leading to faster API calls. Batching, which means combining multiple inputs into a single API request where the API supports it, cuts per-request overhead; sending independent requests concurrently is a separate technique that can also raise throughput, subject to the provider’s rate limits. For very large datasets, consider data streaming to process data in chunks instead of loading the entire dataset into memory at once. This approach is particularly important when dealing with datasets that exceed available RAM. Furthermore, optimizing your data structures and algorithms can reduce processing time. For instance, using efficient data structures like NumPy arrays in Python can speed up numerical computations significantly.
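As a rough sketch of chunked processing and compression, the helpers below group records into fixed-size batches without loading the whole dataset into memory, and gzip-compress each batch as a JSON payload. Whether an API accepts batched input or gzip-encoded request bodies depends on the provider, so treat both as assumptions to check against its documentation.

```python
import gzip
import json
from itertools import islice


def iter_batches(records, batch_size):
    """Yield lists of up to `batch_size` items from any iterable.

    Works with generators, so a large file can be streamed row by row
    instead of being read into memory at once.
    """
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def compress_batch(batch):
    """Serialize one batch to JSON and gzip it for transfer."""
    return gzip.compress(json.dumps(batch).encode("utf-8"))
```

Each compressed batch would then be sent as one request body, typically with a `Content-Encoding: gzip` header if the API supports compressed requests.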

Monitoring and Maintenance of API Integrations

Successfully integrating AI tools via APIs requires ongoing monitoring and maintenance to ensure consistent performance and reliability. Proactive strategies are crucial for identifying and resolving issues before they significantly impact your applications. This involves establishing robust monitoring systems, implementing effective troubleshooting procedures, and committing to regular updates.

Effective monitoring and maintenance are not merely reactive measures; they are proactive strategies that ensure the long-term health and efficiency of your AI-powered applications. By anticipating potential problems and establishing clear processes for addressing them, organizations can minimize downtime, optimize performance, and maximize the return on investment in their AI integrations.

API Performance Monitoring Strategies

Implementing a comprehensive monitoring system is paramount. This involves tracking key performance indicators (KPIs) such as response times, error rates, throughput, and resource utilization. Real-time dashboards visualizing these metrics allow for immediate identification of anomalies. Setting up alerts based on predefined thresholds (e.g., response time exceeding 500ms, error rate exceeding 1%) ensures timely intervention. Utilizing tools that provide detailed logs and tracing capabilities aids in pinpointing the root cause of performance bottlenecks. For example, a sudden spike in response times might indicate a surge in API requests, requiring scaling adjustments, or it might point to a specific code error within the API itself.
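The threshold-based alerting described above can be sketched as a small check over recent call records. The `CallRecord` structure is hypothetical, and using the 95th-percentile latency (rather than the mean or the maximum) is a design choice made for this example; the 500 ms and 1% defaults mirror the example thresholds in the text.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    """One observed API call (hypothetical structure for this sketch)."""
    latency_ms: float
    ok: bool


def check_thresholds(records, max_p95_ms=500.0, max_error_rate=0.01):
    """Return alert messages for a window of recent API calls."""
    if not records:
        return []
    latencies = sorted(r.latency_ms for r in records)
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    rank = (95 * len(latencies) + 99) // 100
    p95 = latencies[rank - 1]
    error_rate = sum(1 for r in records if not r.ok) / len(records)

    alerts = []
    if p95 > max_p95_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds {max_p95_ms:.0f} ms")
    if error_rate > max_error_rate:
        alerts.append(f"error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
    return alerts
```

In practice a monitoring tool would usually compute these metrics for you; the point of the sketch is that each alert is a comparison of one KPI against a predefined threshold over a recent window of calls.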

Troubleshooting and Resolving Integration Problems

Troubleshooting API integration problems often involves a systematic approach. Begin by analyzing the error messages returned by the API. These messages often provide clues about the nature and location of the problem. Leveraging debugging tools and logging mechanisms can provide more granular insights into the flow of data and execution of code. If the issue stems from data inconsistencies, carefully examine the data transformation processes to identify and correct any errors. For example, a mismatch in data formats between your application and the API could lead to integration failures. In more complex scenarios, involving multiple APIs or systems, a methodical approach of isolating the problem area through careful testing and analysis is crucial.
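When a format mismatch is suspected, it can help to compare an outgoing payload against the fields the API expects before sending it. The sketch below is a minimal check of that kind; the expected field names and types are hypothetical and would come from the API's documentation in a real integration.

```python
def find_payload_problems(payload, expected):
    """Compare a payload dict against a mapping of field name -> expected type.

    Returns a list of human-readable problems; an empty list means the
    payload has exactly the expected fields with the expected types.
    """
    problems = []
    for field, expected_type in expected.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {actual}"
            )
    for field in payload:
        if field not in expected:
            problems.append(f"unexpected field: {field}")
    return problems


# Hypothetical input schema for a text-analysis endpoint.
EXPECTED_FIELDS = {"text": str, "language": str}
```

Logging the result of such a check alongside the API's own error message usually narrows a failure down to either the data transformation step or the API call itself.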

Importance of Regular Updates and Maintenance

Regular updates and maintenance are essential for ensuring the continued optimal performance of API integrations. API providers frequently release updates that include bug fixes, performance improvements, and new features. These updates are critical for maintaining security and compatibility. Ignoring updates can expose your application to vulnerabilities and lead to compatibility issues. Regular maintenance tasks may also include reviewing and updating the documentation, optimizing the data transformation processes, and conducting performance testing. Scheduling regular maintenance windows minimizes disruption and ensures that your AI integrations remain efficient and reliable. Failing to perform regular maintenance can lead to accumulated technical debt, resulting in more significant and costly issues in the long run.

Future Trends in API Integration for AI Tools

The landscape of AI API integration is rapidly evolving, driven by advancements in AI capabilities and the increasing demand for seamless integration of AI functionalities into various applications. This evolution is marked by a shift towards more efficient, secure, and versatile API designs and deployment strategies. The following sections explore key trends shaping the future of this critical area.

Emerging Trends in API Design and Development for AI Tools

Several key trends are reshaping how AI APIs are designed and developed. The focus is shifting towards more standardized and interoperable APIs, facilitating easier integration across different platforms and systems. This includes a move towards more granular APIs, offering specific functionalities instead of monolithic solutions, allowing developers to select only the necessary components. Another significant trend is the increased adoption of event-driven architectures, enabling real-time data processing and responsiveness. This approach allows for more efficient resource utilization and improved scalability. Finally, the use of OpenAPI specifications and other standardization efforts is improving the discoverability and usability of AI APIs, simplifying the integration process for developers.

Impact of Serverless Computing on API Integration

Serverless computing is poised to significantly impact AI API integration. By abstracting away server management, serverless functions allow developers to focus on the core logic of their AI applications. This approach leads to cost savings, improved scalability, and reduced operational overhead. For example, a company deploying a sentiment analysis API could leverage serverless functions to scale automatically based on demand, ensuring consistent performance even during peak usage periods. The inherent scalability and pay-as-you-go pricing model make serverless computing a particularly attractive option for AI applications, which often exhibit fluctuating demand. The reduced operational burden allows developers to iterate faster and focus on improving AI model performance rather than managing infrastructure.
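To give a feel for what a serverless function looks like, here is a minimal handler in the style used by AWS Lambda behind an API Gateway proxy integration, where the request arrives as an `event` dictionary with a JSON string in `body`. The keyword-based `classify` function is a toy stand-in for a real sentiment model and is only there to keep the example self-contained.

```python
import json

# Toy word lists standing in for a real sentiment model (illustration only).
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "poor", "hate", "terrible"}


def classify(text):
    """Return 'positive', 'negative', or 'neutral' from simple word counts."""
    words = set(text.lower().split())
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def handler(event, context):
    """Entry point invoked by the platform once per request."""
    try:
        body = json.loads(event.get("body") or "{}")
        text = body["text"]
        if not isinstance(text, str):
            raise TypeError("text must be a string")
    except (json.JSONDecodeError, KeyError, TypeError):
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "expected a JSON body with a 'text' string"}),
        }
    return {"statusCode": 200, "body": json.dumps({"sentiment": classify(text)})}
```

The function holds no server state, which is what lets the platform run as many copies as demand requires and bill only for the invocations that actually happen.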

Future Challenges and Opportunities in AI API Integration

While the future of AI API integration is bright, several challenges remain. Ensuring data privacy and security will continue to be a paramount concern, requiring robust security measures and compliance with relevant regulations. The increasing complexity of AI models and the need for specialized expertise will also pose challenges. However, these challenges also present significant opportunities. The development of tools and frameworks that simplify API integration, coupled with increased standardization efforts, will lower the barrier to entry for developers. The growing availability of pre-trained models and specialized AI APIs will empower developers to build more sophisticated AI applications with reduced development time and effort. Furthermore, advancements in explainable AI (XAI) will enhance the transparency and trustworthiness of AI APIs, fostering greater adoption and confidence in their use.

Successfully integrating AI tools via APIs offers immense potential for businesses of all sizes. From streamlining operations to unlocking innovative capabilities, the advantages are clear. However, careful planning, a robust security strategy, and ongoing maintenance are crucial for maximizing the benefits and mitigating potential risks. By understanding the nuances of API integration, and following best practices, organizations can harness the transformative power of AI to achieve their business objectives efficiently and securely.

The choice of cloud platform also affects API integration, because providers differ in the level of support and integration capabilities they offer. Comparing the major cloud platforms on these points before committing helps ensure that the platform you select supports the efficiency and scalability your AI tool’s API integration needs.

For organizations that prioritize data security and control, hosting AI applications in a private cloud environment offers significant advantages. This approach keeps data within your organization’s boundaries while still allowing efficient API-driven access to, and management of, your AI functionality.