Deep learning platforms are revolutionizing how we approach complex data analysis and artificial intelligence. These powerful tools provide the infrastructure and resources necessary to build, train, and deploy sophisticated deep learning models, streamlining the entire process from data preparation to model deployment. Understanding the nuances of different platforms, their capabilities, and selection criteria is crucial for leveraging the full potential of deep learning in various applications.

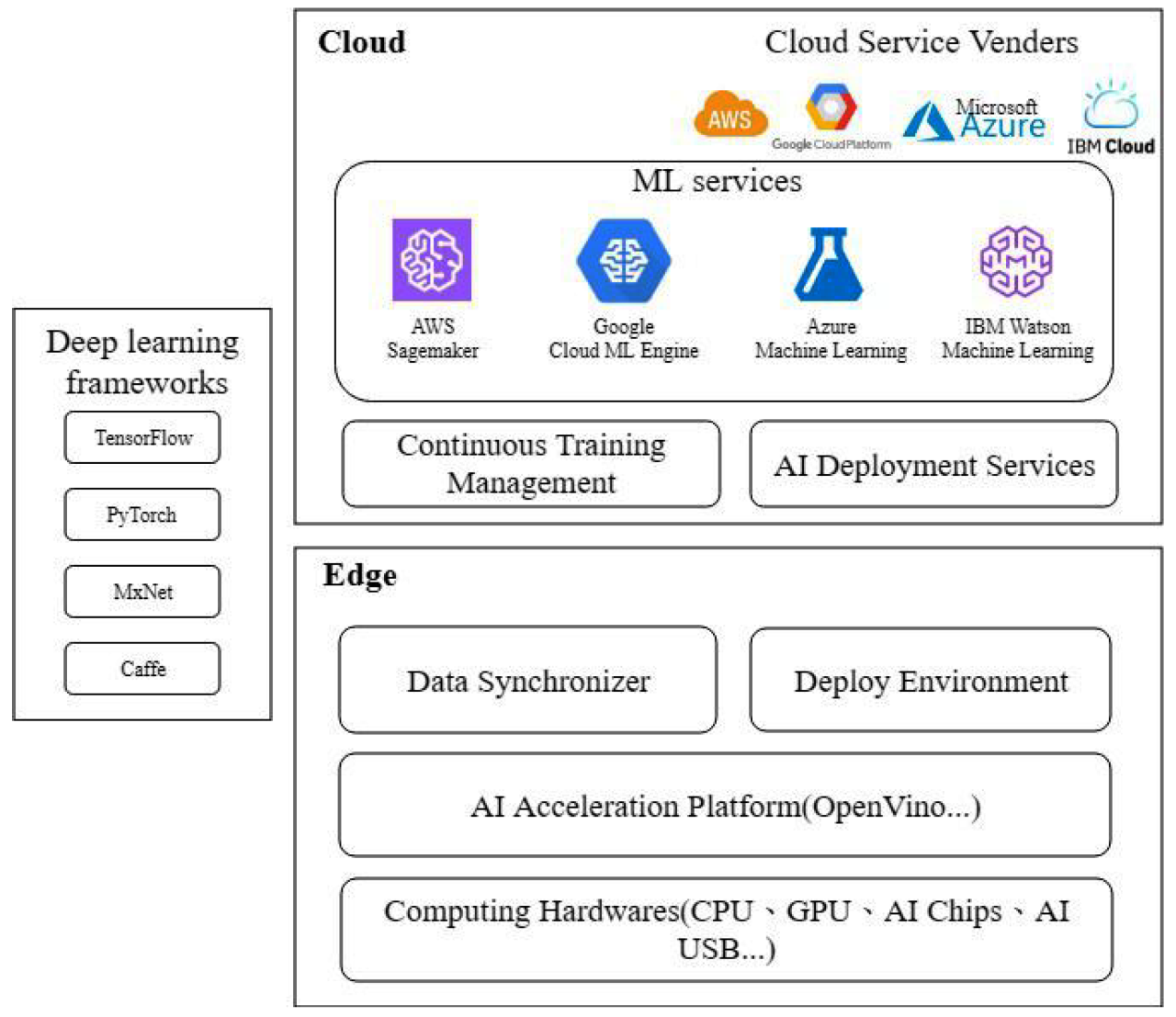

This guide delves into the core components of deep learning platforms, exploring various deployment models, key features, and popular choices such as TensorFlow, PyTorch, and cloud-based solutions like Amazon SageMaker. We will also examine crucial aspects like data management, model deployment strategies, and ongoing monitoring and maintenance.

Types of Deep Learning Platforms

Deep learning platforms provide the necessary tools and infrastructure for building, training, and deploying deep learning models. The choice of platform significantly impacts the efficiency, scalability, and overall success of a deep learning project. Understanding the different types of platforms available is crucial for making informed decisions.

Deep learning platforms can be categorized in several ways, with deployment model being a key differentiator. This impacts factors such as cost, control, and security. Furthermore, the distinction between open-source and commercial platforms influences accessibility, support, and feature sets.

Deployment Models: Cloud, On-Premise, and Hybrid

Where a deep learning platform is deployed significantly impacts its accessibility, cost, and security. Cloud-based platforms offer scalability and accessibility, but can raise data security concerns. On-premise solutions offer greater control but require significant investment in infrastructure. Hybrid models combine elements of both in exchange for added management complexity.

- Cloud-based platforms: These platforms, such as Amazon SageMaker, Google Cloud AI Platform (since succeeded by Vertex AI), and Microsoft Azure Machine Learning, offer scalable computing resources and pre-built tools for deep learning. They are readily accessible and require minimal upfront investment in hardware. However, reliance on a third-party provider introduces concerns about data security and vendor lock-in.

- On-premise platforms: These platforms are deployed within an organization’s own data center, offering greater control over data security and infrastructure. A typical example is a self-managed cluster of machines running TensorFlow or PyTorch. However, on-premise solutions require significant upfront investment in hardware, plus IT expertise for maintenance and management. Scaling is also harder than in the cloud, because adding capacity means buying and installing more hardware.

- Hybrid platforms: These platforms combine aspects of both cloud and on-premise deployments. For instance, an organization might train models on a cloud platform for its scalability and then deploy them on-premise for security reasons, or vice-versa. This approach provides flexibility and allows organizations to leverage the strengths of each model while mitigating their weaknesses.

Open-Source vs. Commercial Deep Learning Platforms

The choice between open-source and commercial platforms depends on factors such as budget, technical expertise, and the level of support required. Open-source platforms offer flexibility and customization, but may lack enterprise-grade support. Commercial platforms provide robust support and features, but often come with a higher cost.

- Open-source platforms: Examples include TensorFlow, PyTorch, and Keras. These platforms offer a high degree of flexibility and customization, allowing developers to tailor their solutions to specific needs. They also benefit from a large and active community, providing access to a wealth of resources and support. However, the lack of dedicated support can be a significant drawback for organizations lacking in-house expertise. Deployment and maintenance can also require significant effort.

- Commercial platforms: Examples include Amazon SageMaker, Google Cloud AI Platform, and Dataiku. These platforms provide comprehensive features, robust support, and enterprise-grade security. They often include pre-built tools and integrations, simplifying the development and deployment process. However, they typically come with a higher cost and may involve vendor lock-in.

Strengths and Weaknesses of Different Platform Types

Each platform type presents a unique set of advantages and disadvantages. The optimal choice depends heavily on the specific needs and resources of the project.

| Platform Type | Strengths | Weaknesses |
|---|---|---|
| Cloud-based | Scalability, accessibility, cost-effectiveness (pay-as-you-go), readily available tools and resources | Data security concerns, vendor lock-in, potential latency issues |
| On-premise | Greater control over data security and infrastructure, customization options | High upfront investment, requires dedicated IT expertise, scalability challenges |
| Hybrid | Flexibility, combines strengths of cloud and on-premise, improved security | Increased complexity in management and integration |
| Open-source | Flexibility, customization, large community support, cost-effective | Lack of dedicated support, potential for deployment and maintenance challenges |
| Commercial | Robust support, enterprise-grade security, pre-built tools and integrations | Higher cost, potential vendor lock-in |

Key Features and Capabilities

Deep learning platforms offer a range of features crucial for successful model development and deployment. Beyond simply providing the computational horsepower needed for training, these platforms incorporate tools and functionalities that streamline the entire deep learning workflow, from data preparation to model monitoring. The efficiency and effectiveness of these features directly impact the speed and accuracy of model development, ultimately determining the success of a deep learning project.

The performance and scalability of a deep learning platform are paramount. Deep learning models, especially large ones, require substantial computational resources. A platform’s ability to handle massive datasets and complex models efficiently, potentially distributing the workload across multiple GPUs or even cloud-based infrastructure, directly translates to faster training times and the ability to tackle larger, more intricate problems. For example, a platform capable of scaling to hundreds of GPUs allows for training models that would be impractical on a single machine, enabling breakthroughs in areas like natural language processing and image recognition. Similarly, performance optimizations, such as optimized linear algebra libraries and efficient memory management, are crucial for minimizing training time and maximizing resource utilization.

Scalability and Performance in Deep Learning

Scalability refers to a platform’s ability to handle increasing workloads without significant performance degradation. This is achieved through techniques like distributed training, where the model training process is split across multiple machines, and efficient resource allocation. Performance, on the other hand, focuses on the speed and efficiency of the platform’s operations, including data loading, model training, and inference. Factors influencing performance include the underlying hardware, software optimizations, and the platform’s architecture. A platform’s ability to scale efficiently allows researchers and developers to tackle increasingly complex problems and handle larger datasets, leading to more accurate and powerful models. For instance, training a large language model with billions of parameters requires a highly scalable platform capable of distributing the training workload across numerous GPUs and managing the massive amounts of data involved.
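To make the idea of distributed training concrete, here is a minimal sketch of synchronous data-parallel training in plain Python. It is an illustration under stated assumptions, not how any particular platform implements it: the one-parameter model `y = w * x`, the toy dataset, and the helper names (`split_into_shards`, `data_parallel_step`) are all hypothetical, and the "workers" run sequentially in one process rather than on separate GPUs.

```python
# Data-parallel training sketch: each "worker" computes a gradient on its own
# shard, and the averaged gradient drives a single shared update.

def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def split_into_shards(data, num_workers):
    """Round-robin split so shards have near-equal size."""
    return [data[i::num_workers] for i in range(num_workers)]

def data_parallel_step(w, shards, lr):
    """One synchronous step: average per-shard gradients, then update w."""
    grads = [gradient(w, shard) for shard in shards]  # would run in parallel
    avg_grad = sum(grads) / len(grads)
    return w - lr * avg_grad

# Toy data generated from y = 3x (illustrative only).
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = split_into_shards(data, num_workers=4)

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
```

Because the shards here are the same size, averaging the per-shard gradients gives the same result as computing the gradient on the full dataset, which is why the work can be split without changing the outcome. Real platforms add the parts this sketch leaves out, such as network communication between machines and fault handling.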

Advanced Features: Model Versioning and Automated Model Training

Modern deep learning platforms incorporate advanced features that significantly enhance the development workflow. Model versioning lets developers track successive versions of a model, compare their performance, and revert to an earlier version if necessary, which is particularly important in collaborative projects and when iterating on model designs. Automated model training streamlines the often tedious process of hyperparameter tuning and model selection by using search algorithms to systematically explore hyperparameter settings and select the best-performing model. This reduces manual effort and frees developers to focus on other aspects of the project. For example, Google’s AutoML provides automated machine learning capabilities, reducing the need for extensive manual intervention in model development.
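The two ideas in this section can be sketched together: an exhaustive grid search over hyperparameters in which every trial is recorded as a numbered version. This is a simplified illustration, not the method used by AutoML or any specific platform. The `validation_loss` function is a made-up stand-in for a real training run, and the registry is just an in-memory list.

```python
import itertools

def validation_loss(learning_rate, batch_size):
    """Stand-in for a real training run; returns a made-up loss surface."""
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64

def grid_search(search_space):
    """Try every combination and record each trial as a numbered version."""
    names = sorted(search_space)
    combinations = itertools.product(*(search_space[n] for n in names))
    registry = []
    for version, values in enumerate(combinations, start=1):
        params = dict(zip(names, values))
        registry.append({
            "version": version,
            "params": params,
            "loss": validation_loss(**params),
        })
    return registry

# Hypothetical search space for illustration.
search_space = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [32, 64, 128]}
registry = grid_search(search_space)

# Model selection: pick the recorded version with the lowest loss.
best = min(registry, key=lambda trial: trial["loss"])
```

Keeping every trial in the registry, rather than only the winner, is what makes it possible to compare versions later or fall back to an earlier one.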

Integrated Development Environments (IDEs)

Integrated Development Environments (IDEs) play a vital role in deep learning platforms by providing a unified environment for coding, debugging, and managing projects. IDEs offer features such as code completion, debugging tools, and integrated version control, simplifying the development process and improving productivity. Many deep learning platforms include or integrate with popular development environments such as VS Code or Jupyter notebooks, providing a familiar interface for developers. Integration with the platform’s other functionality, such as model visualization and deployment tools, further streamlines the workflow, allowing developers to manage the entire deep learning lifecycle within a single environment. A well-integrated IDE improves collaboration among team members, reduces errors, and facilitates efficient model development and deployment.

Deep Learning Platform Selection Criteria

Choosing the right deep learning platform is crucial for the success of any project. The selection process requires careful consideration of various factors, balancing technical capabilities with project constraints and team expertise. A poorly chosen platform can lead to increased development time, higher costs, and ultimately, project failure.

Selecting a deep learning platform involves a multifaceted evaluation process. Several key aspects must be carefully weighed to ensure alignment with project needs and available resources. This includes analyzing the project’s requirements, assessing the capabilities of different platforms, and evaluating the team’s skillset.

Project Requirements and Platform Capabilities

Project requirements significantly influence platform selection. Data size, model complexity, and budget constraints all play a vital role. Projects involving massive datasets (terabytes or petabytes) need platforms with robust data handling and scalability, such as cloud-based solutions like Amazon SageMaker or Google Cloud AI Platform. Smaller projects with limited data may be well served by open-source frameworks like TensorFlow or PyTorch running on local hardware. Similarly, complex models that require significant computational power demand platforms with powerful GPUs and optimized parallel processing, while simpler models may run efficiently on less powerful hardware. Budget limits narrow the choices further, pushing towards open-source solutions and less expensive cloud instances. For example, a research project with limited funding might opt for a free, open-source framework like TensorFlow, whereas a large-scale commercial deployment might justify the higher cost of a cloud-based solution for its greater scalability.

Team Skills and Experience

The skills and experience of the development team are paramount in platform selection. Choosing a platform that aligns with the team’s expertise ensures efficient development and minimizes the learning curve. A team proficient in Python and familiar with TensorFlow will likely find it easier to develop and deploy models using TensorFlow-based platforms. Conversely, a team with limited experience in a specific platform might find it beneficial to choose a platform with extensive documentation, readily available community support, and a user-friendly interface. For example, a team with strong experience in using the Keras API might find it advantageous to select a platform that seamlessly integrates with Keras, even if it means potentially sacrificing some other functionalities. Lack of internal expertise might necessitate choosing a platform with readily available training resources and community support, mitigating potential delays and challenges associated with onboarding new technologies.

Scalability and Deployment Considerations

The chosen platform must be capable of scaling to accommodate future growth and changes in project requirements. Consider the need for model retraining, updating, and deployment to various environments (e.g., cloud, on-premise, edge devices). Platforms offering robust model deployment options and seamless integration with various infrastructure environments are crucial for long-term project success. For example, a platform with built-in model versioning and automated deployment capabilities can streamline the process of updating models and deploying them to different environments, ensuring efficient maintenance and updates. A platform lacking these features may lead to increased deployment complexities and potential bottlenecks in the long run.

Data Management and Preprocessing

Deep learning models are data-hungry beasts. Their performance is directly tied to the quality and quantity of the data they are trained on. Effective data management and preprocessing are therefore crucial steps in any successful deep learning project, especially when dealing with the massive datasets often involved. Deep learning platforms provide a range of tools and functionalities to streamline this process, making it more efficient and less error-prone.

Deep learning platforms address the challenges of handling large datasets through several strategies. They often leverage distributed computing frameworks like Apache Spark or Hadoop to distribute data processing across multiple machines, significantly accelerating the process. Furthermore, they employ techniques like data sharding and parallel processing to manage and manipulate datasets that exceed the capacity of a single machine’s memory. Data is frequently stored in specialized formats like Parquet or ORC, which are optimized for efficient querying and analysis within these distributed environments. For example, a platform might use Spark to distribute a terabyte-sized image dataset across a cluster of servers, enabling parallel preprocessing steps such as image resizing and normalization.
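The sharding and parallel preprocessing described above can be sketched on a single machine with Python's standard library. This is only an analogy for what Spark or Hadoop do across a cluster: the threads stand in for separate servers, the "images" are short lists of 8-bit pixel values, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def make_shards(records, num_shards):
    """Split records into contiguous shards of near-equal size."""
    size, extra = divmod(len(records), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        end = start + size + (1 if i < extra else 0)
        shards.append(records[start:end])
        start = end
    return shards

def preprocess_shard(shard):
    """Scale 8-bit pixel values to the range [0, 1]."""
    return [[pixel / 255 for pixel in image] for image in shard]

def preprocess_in_parallel(records, num_shards=4):
    """Preprocess each shard in its own worker, then reassemble in order."""
    shards = make_shards(records, num_shards)
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        processed = list(pool.map(preprocess_shard, shards))  # keeps shard order
    return [image for shard in processed for image in shard]

# Tiny stand-in dataset: five "images" of three pixels each.
images = [[0, 128, 255], [255, 255, 0], [51, 102, 204], [0, 0, 0], [255, 0, 255]]
normalized = preprocess_in_parallel(images, num_shards=2)
```

Each shard is processed independently of the others, which is the property that lets a cluster framework assign shards to different machines.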

Data Cleaning and Transformation

Data cleaning involves identifying and correcting or removing inaccurate, incomplete, irrelevant, or duplicate data points. Common techniques include handling missing values (imputation or removal), outlier detection and treatment, and noise reduction. Data transformation involves converting data into a suitable format for deep learning models. This might include scaling features to a specific range (e.g., standardization or normalization), encoding categorical variables (e.g., one-hot encoding or label encoding), or transforming skewed distributions (e.g., logarithmic transformation). For instance, a platform might automatically detect and impute missing values in a tabular dataset using techniques like k-Nearest Neighbors imputation before feeding the data to a model. Similarly, it might normalize pixel values in images to a range between 0 and 1 to improve model training stability.
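A few of these cleaning and transformation steps are simple enough to sketch in plain Python. Note the assumptions: the example uses mean imputation rather than the k-Nearest Neighbors imputation mentioned above, because it fits in a few lines, and the column names and values are invented for illustration.

```python
def impute_mean(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Encode categories as 0/1 vectors, columns in sorted category order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]

# Hypothetical tabular columns with one missing age.
ages = [20, None, 40, 30]
plans = ["basic", "pro", "basic", "free"]

ages_clean = impute_mean(ages)            # [20, 30.0, 40, 30]
ages_scaled = min_max_scale(ages_clean)   # [0.0, 0.5, 1.0, 0.5]
plans_encoded = one_hot(plans)            # columns: basic, free, pro
```

In practice the imputation mean and the scaling range should be computed on the training split only and then reused for validation and production data, so that no information leaks from data the model has not seen.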

Feature Engineering

Feature engineering is the process of creating new features from existing ones to improve model performance. This involves selecting relevant features, transforming existing features, and creating entirely new features that capture relevant information. Techniques include principal component analysis (PCA) for dimensionality reduction, feature scaling, and the creation of interaction terms or polynomial features. For example, in a customer churn prediction model, a platform might automatically engineer a new feature representing the average monthly spending of a customer based on their transaction history. This new feature, derived from existing transaction data, can be highly informative for the model.
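The churn example above can be sketched as follows. The record layout (`customer_id`, ISO-formatted `date`, `amount`) is an assumption made for illustration, and "average monthly spending" is interpreted here as the mean of a customer's monthly totals over the months in which they made purchases; other definitions are equally reasonable.

```python
from collections import defaultdict

def average_monthly_spending(transactions):
    """Return {customer_id: mean of monthly totals} over months with activity."""
    monthly = defaultdict(lambda: defaultdict(float))
    for t in transactions:
        month = t["date"][:7]  # "YYYY-MM" prefix of an ISO date string
        monthly[t["customer_id"]][month] += t["amount"]
    return {cid: sum(m.values()) / len(m) for cid, m in monthly.items()}

# Hypothetical transaction history.
transactions = [
    {"customer_id": "c1", "date": "2024-01-05", "amount": 40.0},
    {"customer_id": "c1", "date": "2024-01-20", "amount": 60.0},
    {"customer_id": "c1", "date": "2024-02-11", "amount": 50.0},
    {"customer_id": "c2", "date": "2024-01-09", "amount": 10.0},
]
features = average_monthly_spending(transactions)
```

Customer `c1` spent 100.0 in January and 50.0 in February, so the engineered feature is 75.0. The raw transactions never contained that number directly.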

Data Integration from Various Sources

Deep learning projects often involve data from multiple sources, such as databases, cloud storage, APIs, and streaming data platforms. Deep learning platforms provide mechanisms to integrate data from diverse sources into a unified workflow. This typically involves using connectors and APIs to access data from various sources, transforming the data into a consistent format, and loading it into a central repository or data lake. For example, a platform might allow users to seamlessly connect to a relational database, extract relevant customer data, join it with sensor data from a cloud storage service, and then prepare this combined dataset for training a recommendation engine. The platform might handle data type conversions, schema mapping, and data cleaning as part of this integration process.
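As a rough sketch of what such an integration step does, the example below joins customer rows (as they might come from a relational database) with sensor readings (as they might come from cloud storage). Everything about the data is hypothetical, including the mismatched key names (`customer_id` versus `cust`) and the string-typed temperatures, which are there to show schema mapping and type conversion.

```python
def to_float(value):
    """Coerce strings such as '21.5' to float; keep missing values as None."""
    return None if value in (None, "") else float(value)

def integrate(customers, sensor_readings):
    """Left-join customers with sensor readings on the customer identifier."""
    readings_by_id = {}
    for row in sensor_readings:
        # Schema mapping: the sensor source calls the key "cust".
        readings_by_id.setdefault(row["cust"], []).append(to_float(row["temp"]))
    unified = []
    for c in customers:
        temps = [t for t in readings_by_id.get(c["customer_id"], []) if t is not None]
        unified.append({
            "customer_id": c["customer_id"],
            "region": c["region"].strip().lower(),  # light cleaning
            "avg_temp": sum(temps) / len(temps) if temps else None,
        })
    return unified

customers = [
    {"customer_id": 1, "region": " EU "},
    {"customer_id": 2, "region": "us"},
]
sensor_readings = [
    {"cust": 1, "temp": "21.5"},
    {"cust": 1, "temp": "22.5"},
    {"cust": 3, "temp": "19.0"},  # no matching customer, dropped by the join
]
dataset = integrate(customers, sensor_readings)
```

A left join keeps every customer even when no sensor data exists for them, leaving the gap explicit as `None` so a later cleaning step can decide how to handle it.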

Model Deployment and Monitoring

Deploying a trained deep learning model into a production environment marks a crucial transition from experimentation to real-world application. This process involves several steps, from selecting the appropriate deployment strategy to establishing robust monitoring mechanisms to ensure the model continues to perform as expected. Successful deployment relies on careful planning and a comprehensive understanding of the production environment’s demands.

The deployment process typically involves packaging the trained model, along with any necessary dependencies, into a deployable format. This might involve creating a containerized application using Docker, or integrating the model into a serverless architecture using cloud platforms like AWS Lambda or Google Cloud Functions. The choice of deployment method depends heavily on factors like scalability requirements, resource constraints, and the specific deep learning platform used during training. Once deployed, the model begins processing real-world data, generating predictions that are integrated into the application’s workflow.

Model Deployment Strategies

Several strategies exist for deploying deep learning models, each with its own advantages and disadvantages. A common approach is to deploy the model as a REST API, allowing other applications to easily access its prediction capabilities. Alternatively, models can be embedded directly into applications, offering faster response times but potentially reducing scalability. Edge deployment, placing the model directly on devices like smartphones or IoT sensors, is also becoming increasingly popular, reducing latency and enabling offline functionality. The optimal strategy depends on factors such as the model’s size, the required latency, and the overall system architecture.
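To illustrate the REST API approach, here is a minimal sketch using only Python's standard library. The "model" is a hypothetical linear scorer with made-up weights, and the `/predict` path and JSON shape are assumptions; a production service would typically use a dedicated serving framework and add authentication, input validation, and logging.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHTS = [0.4, -0.2, 0.1]  # hypothetical parameters of an already-trained model
BIAS = 0.05

def predict(features):
    """Linear score standing in for a real model's forward pass."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS

def handle_predict(body):
    """Turn a JSON request body into (HTTP status, JSON response body)."""
    try:
        features = json.loads(body)["features"]
        return 200, json.dumps({"prediction": predict(features)})
    except (ValueError, KeyError, TypeError) as exc:
        return 400, json.dumps({"error": str(exc)})

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = payload.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port=8000):
    """Blocking call; run it from a script entry point, not at import time."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the endpoint locally, after which a client can POST a body such as `{"features": [1, 1, 1]}` to `/predict`. Keeping `handle_predict` separate from the HTTP handler means the request logic can be tested without opening a socket, and the same function could be reused if the model were later embedded directly in an application.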

Model Monitoring and Maintenance

Continuous monitoring is essential to ensure the model maintains its accuracy and performance over time. This involves tracking key metrics such as precision, recall, F1-score, and AUC, comparing the model’s performance against a baseline, and identifying potential issues such as concept drift (where the relationship between input features and target variables changes over time) or data quality degradation. Regular monitoring allows for proactive intervention, preventing performance degradation and maintaining the model’s value. For example, a fraud detection model might see a decline in performance if the tactics used by fraudsters change, requiring retraining with updated data.
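A minimal monitoring check might compute these metrics on a recent batch of labeled predictions and compare them with a stored baseline. The sketch below covers precision, recall, and F1 for a binary classifier; the baseline values, the 0.05 tolerance, and the sample labels are all hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def degraded_metrics(current, baseline, tolerance=0.05):
    """Names of metrics that fell more than `tolerance` below the baseline."""
    return [name for name in baseline if baseline[name] - current[name] > tolerance]

# Hypothetical baseline recorded at deployment time.
baseline = {"precision": 0.90, "recall": 0.85, "f1": 0.87}

# Hypothetical recent batch: true fraud labels vs. model predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]

current = classification_metrics(y_true, y_pred)
alerts = degraded_metrics(current, baseline)
```

In this batch the model catches only two of four fraud cases, so every metric falls well below the baseline and all three would be flagged. One practical caveat is that true labels often arrive late in production, so checks like this usually run on a delay.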

Model Retraining and Updates

Deep learning models are not static entities. As new data becomes available or the underlying patterns in the data change, the model’s performance can degrade. Therefore, a crucial aspect of model management is implementing a strategy for retraining and updating the model. This involves periodically retraining the model with new data, incorporating feedback from the monitoring process, and deploying the updated model to production. The frequency of retraining depends on factors such as the rate of data change and the model’s sensitivity to concept drift. A well-defined retraining pipeline ensures the model remains relevant and effective over its lifespan. For instance, a recommendation system might require frequent retraining to incorporate new user interactions and product information.
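One simple way to express a retraining strategy is as an explicit policy that combines model age, the volume of new data, and the metric drop reported by monitoring. The thresholds below are placeholders chosen for illustration, not recommendations; appropriate values depend on how quickly the data changes.

```python
def should_retrain(days_since_training, new_samples, metric_drop,
                   max_age_days=30, min_new_samples=10_000, max_metric_drop=0.05):
    """Return the list of reasons to retrain; an empty list means 'not yet'."""
    reasons = []
    if days_since_training >= max_age_days:
        reasons.append("model age")
    if new_samples >= min_new_samples:
        reasons.append("new data volume")
    if metric_drop > max_metric_drop:
        reasons.append("metric degradation")
    return reasons

# A recently trained model whose monitored metric has dropped by 0.08.
reasons = should_retrain(days_since_training=10, new_samples=500, metric_drop=0.08)
```

Returning the reasons rather than a bare boolean makes each retraining run easier to audit, since the pipeline can log why it was triggered.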

Ultimately, the choice of deep learning platform depends heavily on specific project needs and team expertise. By carefully considering factors like data scale, model complexity, budget constraints, and the development team’s skillset, organizations can select the optimal platform to achieve their deep learning objectives efficiently and effectively. Mastering these platforms unlocks the potential to solve complex problems and drive innovation across numerous industries.

Deep learning platforms are increasingly leveraging cloud infrastructure for scalability and efficiency. This often involves adopting cloud-native development principles, which allow for greater agility and resource optimization. As a result, deploying and managing models becomes more streamlined, which can improve performance and reduce operational overhead.

This reliance on cloud infrastructure also exposes deep learning platforms to the security challenges common to cloud environments. Robust security measures are therefore crucial for protecting sensitive data and model integrity, and for keeping deep learning applications both effective and trustworthy.