Predictive modeling tools are revolutionizing how we approach decision-making across diverse sectors. These powerful instruments leverage historical data to forecast future trends, enabling proactive strategies and informed choices. From predicting customer behavior to optimizing resource allocation, predictive modeling offers a data-driven approach to problem-solving, impacting everything from finance and healthcare to marketing and beyond. Understanding their capabilities is crucial in today’s data-rich world.

This guide covers the core concepts of predictive modeling: the main types of tools, data requirements, model evaluation and selection, and deployment and monitoring. It is written as an overview for both beginners and experienced professionals. Worked scenarios and case studies illustrate the practical applications and potential impact of these tools.

Defining Predictive Modeling Tools

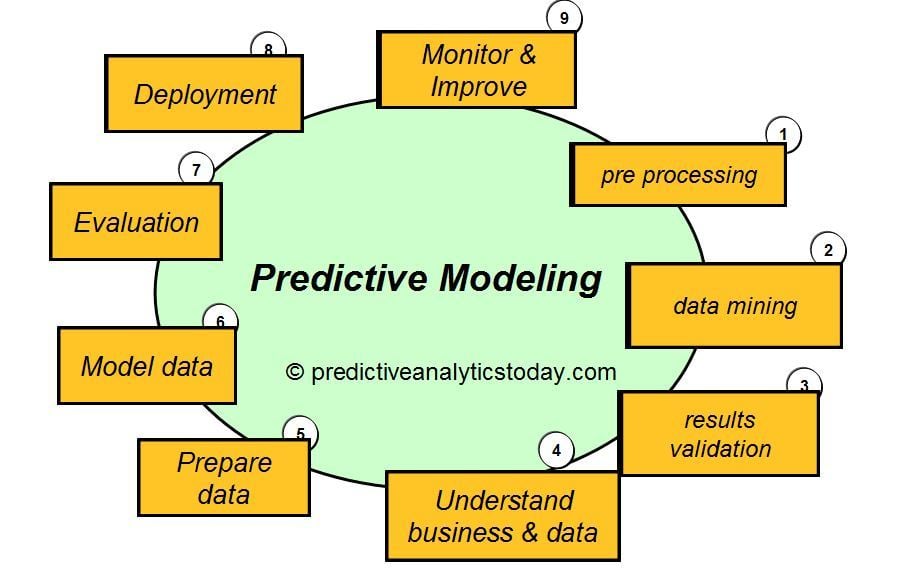

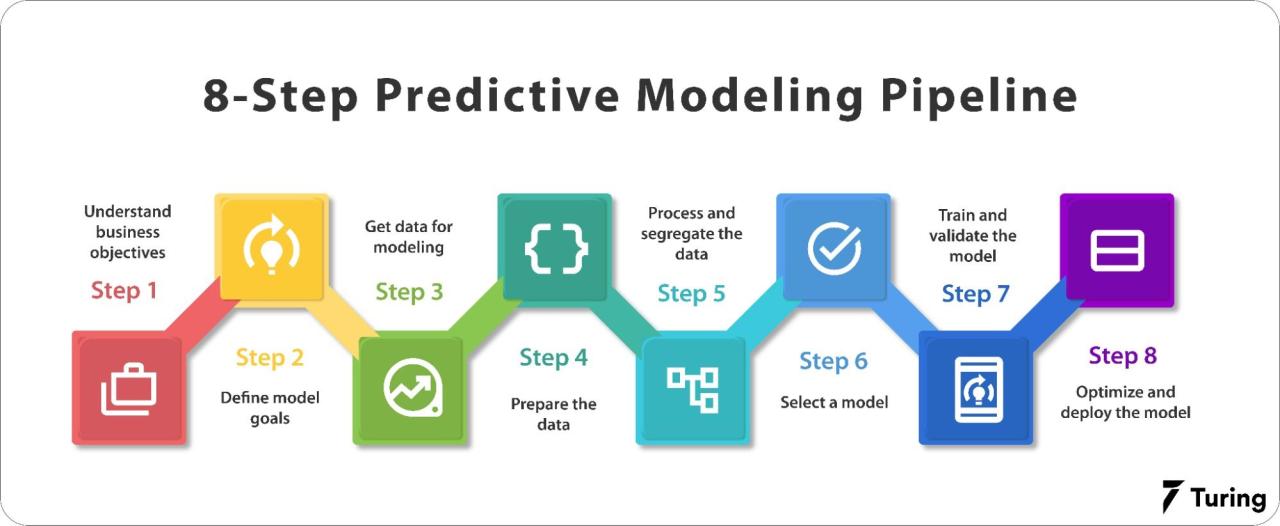

Predictive modeling tools are software applications and platforms designed to analyze historical data and build statistical models that forecast future outcomes. These tools leverage various algorithms and techniques to identify patterns and relationships within data, enabling businesses and researchers to make informed decisions and anticipate future trends. They are essential in various fields, from finance and marketing to healthcare and manufacturing, where understanding future possibilities is crucial for strategic planning and resource allocation.

Predictive modeling tools encompass a wide range of functionalities, from data preprocessing and feature engineering to model building, evaluation, and deployment. They often incorporate user-friendly interfaces, allowing users with varying levels of technical expertise to utilize powerful analytical capabilities. The selection of the appropriate tool depends heavily on the specific needs of the project, including the size and type of data, the complexity of the predictive task, and the technical skills of the users.

Types of Predictive Modeling Tools

The market offers a diverse range of predictive modeling tools, each with its strengths and weaknesses. These tools can be broadly categorized based on their functionalities, target users, and underlying algorithms. Some are designed for specialized tasks, while others provide more comprehensive capabilities.

- Statistical Software Packages: Tools like R and SPSS offer extensive statistical capabilities and allow for highly customized model building. They are particularly suited for users with strong statistical backgrounds. R requires coding skills, while SPSS can also be driven through menus. These packages offer a vast library of algorithms and functions, allowing for detailed control over the modeling process. For example, a researcher might use R to build a complex regression model to predict customer churn based on demographic and behavioral data.

- Machine Learning Platforms: Platforms such as Azure Machine Learning, AWS SageMaker, and Google Cloud AI Platform (now part of Vertex AI) provide cloud-based environments for building, training, and deploying machine learning models. They often offer pre-built algorithms and automated model selection features, simplifying the process for users with less technical expertise. A marketing team might utilize such a platform to create a model predicting customer response to a new advertising campaign.

- Business Intelligence (BI) Tools with Predictive Capabilities: Many BI platforms, such as Tableau and Power BI, now incorporate predictive modeling features. These tools often provide user-friendly interfaces and integrate seamlessly with existing data warehouses. A sales manager could leverage the predictive capabilities within a BI tool to forecast future sales based on historical data and market trends.

- Specialized Predictive Modeling Software: Some tools are designed for specific industries or tasks, such as fraud detection, credit scoring, or risk management. These tools often incorporate domain-specific algorithms and features tailored to the particular application. For instance, a financial institution might use a specialized tool designed for credit risk assessment to evaluate loan applications and predict the likelihood of default.

Key Features and Functionalities

Regardless of their specific type, most predictive modeling tools share several key features and functionalities. These features contribute to their overall effectiveness and usability.

- Data Import and Preprocessing: The ability to import data from various sources (databases, spreadsheets, APIs) and perform necessary cleaning, transformation, and feature engineering tasks is crucial. This often includes handling missing values, outlier detection, and data normalization.

- Model Building and Training: Tools typically offer a range of algorithms (regression, classification, clustering, etc.) to build predictive models. The ability to train models on historical data and evaluate their performance is essential.

- Model Evaluation and Selection: Tools provide metrics (accuracy, precision, recall, AUC) to assess the performance of different models. This allows users to select the best model for their specific needs.

- Model Deployment and Monitoring: The ability to deploy models into production environments (web applications, databases) and monitor their performance over time is crucial for ensuring accuracy and reliability.

- Visualization and Reporting: Effective visualization tools allow users to understand the insights generated by the models and communicate their findings to stakeholders.

Data Requirements for Predictive Modeling

Predictive modeling relies heavily on the quality and quantity of the data used to train the model. The success of any predictive model is directly tied to the characteristics and preparation of this input data. Insufficient or poorly prepared data will inevitably lead to inaccurate and unreliable predictions. Understanding the data requirements is therefore crucial in the initial stages of model development.

The type of data required depends heavily on the specific predictive modeling task. However, some data types are more commonly used than others. Generally, a combination of numerical, categorical, and potentially temporal data is needed to create a robust model. The preprocessing steps required will also vary depending on the nature of this data.

Essential Data Types for Predictive Modeling

Numerical data represents quantities and can be continuous (e.g., temperature, weight) or discrete (e.g., number of customers, count of events). Categorical data represents qualities or characteristics and can be nominal (e.g., color, gender) or ordinal (e.g., education level, customer satisfaction rating). Temporal data represents time-related information (e.g., date, time). For instance, in a model predicting customer churn, numerical data might include the customer’s average monthly spending, categorical data might include their location and subscription type, and temporal data might include the date of their initial subscription and the frequency of their logins. The effective use of all these data types allows for a more comprehensive and accurate prediction.

Data Preprocessing and Cleaning for Predictive Models

Data preprocessing is a critical step that significantly impacts the accuracy and reliability of predictive models. This process involves transforming raw data into a format suitable for model training. Key steps include: handling missing values (e.g., imputation with mean, median, or mode; removal of rows or columns with excessive missing data), outlier detection and treatment (e.g., removal, transformation, or capping), data transformation (e.g., standardization, normalization), and feature engineering (creating new features from existing ones to improve model performance). For example, if a dataset contains missing values for income, we might impute the missing values using the average income of similar customers. Outliers, such as unusually high transaction values, might require investigation and either removal or transformation to mitigate their influence on the model.
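The preprocessing steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than a recommended pipeline: the income figures are invented, the capping bounds of 0 and 10,000 are arbitrary assumptions, and median imputation is used here as one of the options mentioned above.

```python
from statistics import mean, median, pstdev

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def cap_outliers(values, lower, upper):
    """Clip every value to the closed interval [lower, upper]."""
    return [min(max(v, lower), upper) for v in values]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical monthly incomes; None marks a missing value.
incomes = [3200, 4100, None, 3900, 250000, 3600]

filled = impute_median(incomes)           # the None becomes 3900
capped = cap_outliers(filled, 0, 10000)   # 250000 is capped at 10000
scaled = standardize(capped)              # mean 0, standard deviation 1
```

Whether an extreme value should be capped, transformed, or removed depends on whether it is a data error or a genuine observation, so the capping step in particular should be treated as a placeholder for that decision.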

Data Preparation Workflow for Customer Churn Prediction

Let’s consider a scenario where we want to build a predictive model to identify customers at high risk of churning (canceling their subscription). A typical data preparation workflow might look like this:

1. Data Collection: Gather data from various sources, including customer databases, transaction logs, and customer support interactions. This data might include demographic information, subscription details, usage patterns, and customer service interactions.

2. Data Cleaning: Handle missing values, such as imputing missing values for average monthly spending using the median value for similar customer segments. Address inconsistencies in data formats (e.g., standardizing date formats).

3. Data Transformation: Normalize numerical features (e.g., average monthly spending) to ensure they have a similar scale. Convert categorical features (e.g., location) into numerical representations using techniques like one-hot encoding.

4. Feature Engineering: Create new features based on existing data. For instance, calculate the customer’s average monthly login frequency or the number of customer service tickets raised.

5. Data Splitting: Divide the prepared dataset into training, validation, and testing sets so that model performance can be measured on data the model has not seen and overfitting can be detected. A common split might be 70% for training, 15% for validation, and 15% for testing.

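This workflow prepares the data for training a robust and accurate customer churn prediction model. In practice, any statistics used for imputation or scaling (such as medians, means, and standard deviations) should be computed from the training set only and then applied to the validation and testing sets; otherwise information from the held-out data leaks into training. The specific steps and techniques employed will depend on the characteristics of the data and the chosen predictive modeling technique.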
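Two of the workflow steps, one-hot encoding and the 70/15/15 split, are mechanical enough to sketch directly. The example below uses only the standard library; the function names, the region values, and the fixed random seed are illustrative assumptions, and the integers stand in for prepared customer records.

```python
import random

def one_hot(values):
    """Map each categorical value to a 0/1 vector, one position per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories

def split_dataset(rows, train=0.70, validation=0.15, seed=42):
    """Shuffle the rows, then cut them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],   # whatever remains is the test set
    )

# Hypothetical customer regions.
encoded, categories = one_hot(["north", "south", "north", "west"])

# Stand-in for 100 prepared customer records.
rows = list(range(100))
train_rows, val_rows, test_rows = split_dataset(rows)
```

A purely random split is the simplest choice. When the churn rate is low, a stratified split that preserves the class ratio in each subset is often preferable, and time-ordered data may call for a chronological split instead.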

Model Evaluation and Selection

Building a predictive model is only half the battle; effectively evaluating and selecting the best model for a given task is equally crucial. This involves assessing model performance using various metrics and employing techniques to optimize model parameters and ultimately choose the model that best aligns with business objectives. The process is iterative, often requiring experimentation and refinement.

Model performance evaluation hinges on understanding various metrics that quantify a model’s predictive capabilities. These metrics provide a quantitative assessment of how well the model generalizes to unseen data. Choosing the appropriate metric depends heavily on the specific problem and the relative importance of different types of errors.

Performance Metrics

Several metrics are commonly used to evaluate predictive models. These metrics provide different perspectives on model accuracy, considering the trade-off between correctly identifying positive and negative instances. Misclassifications can be costly depending on the context, making the choice of metric critical.

- Accuracy: The ratio of correctly classified instances to the total number of instances. While simple to understand, accuracy can be misleading when dealing with imbalanced datasets (where one class significantly outnumbers the other).

- Precision: The proportion of correctly predicted positive instances among all instances predicted as positive. High precision means a low rate of false positives.

- Recall (Sensitivity): The proportion of correctly predicted positive instances among all actual positive instances. High recall means a low rate of false negatives.

- F1-Score: The harmonic mean of precision and recall, providing a balanced measure of both. It’s particularly useful when dealing with imbalanced datasets.

- AUC (Area Under the ROC Curve): Measures the ability of the classifier to distinguish between classes. A higher AUC indicates better discrimination. The ROC curve plots the true positive rate against the false positive rate at various threshold settings.

For example, in a fraud detection system, high recall is crucial to minimize missing fraudulent transactions (false negatives), even if it means accepting a higher rate of false positives (flagging legitimate transactions as fraudulent). Conversely, in a spam filter, high precision is preferred to minimize legitimate emails being marked as spam (false positives), even if it means missing some spam emails (false negatives).
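To make the definitions concrete, the sketch below computes these metrics from scratch on a small, invented, imbalanced example (2 positives out of 10). The labels, predictions, and scores are made up for illustration. AUC is computed through its rank interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def roc_auc(y_true, scores):
    """AUC as the share of positive/negative pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

# A model that always predicts "negative" still reaches 80% accuracy.
always_negative = classification_metrics(y_true, [0] * 10)

# A model that makes one correct and one incorrect positive call.
y_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)

scores = [0.9, 0.4, 0.6, 0.1, 0.2, 0.3, 0.05, 0.15, 0.25, 0.35]
auc = roc_auc(y_true, scores)
```

Both sets of predictions have the same accuracy (0.8), but the always-negative model has a recall of 0, which is why accuracy alone is a poor guide on imbalanced data.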

Model Selection and Hyperparameter Tuning

Model selection involves choosing the best algorithm (e.g., logistic regression, support vector machine, random forest) from a set of candidates. Hyperparameter tuning is the process of optimizing the algorithm’s parameters to achieve the best performance. This is often an iterative process, requiring experimentation with different combinations of parameters.

Techniques such as grid search, random search, and Bayesian optimization are commonly employed for hyperparameter tuning. Grid search systematically tries all combinations of specified hyperparameters, while random search randomly samples from the hyperparameter space, which often covers large spaces more cheaply than an exhaustive grid. Bayesian optimization uses a probabilistic model of past results to choose the next configuration to try, which makes it efficient when each training run is expensive.
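The difference between grid search and random search is easiest to see side by side. In the sketch below, `validation_score` is a hypothetical stand-in for "train a model with these hyperparameters and score it on the validation set"; its formula, the parameter names `learning_rate` and `depth`, and the search ranges are all assumptions made for illustration.

```python
import itertools
import random

def validation_score(learning_rate, depth):
    """Hypothetical validation score; peaks at learning_rate=0.1, depth=6."""
    return 1.0 - (learning_rate - 0.1) ** 2 - 0.01 * (depth - 6) ** 2

def grid_search(grid):
    """Evaluate every combination of the listed values and keep the best."""
    names = list(grid)
    best_score, best_params = None, None
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = validation_score(**params)
        if best_score is None or score > best_score:
            best_score, best_params = score, params
    return best_score, best_params

def random_search(n_trials, seed=0):
    """Evaluate n_trials randomly sampled configurations and keep the best."""
    rng = random.Random(seed)
    best_score, best_params = None, None
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(0.01, 0.5),
            "depth": rng.randint(2, 10),
        }
        score = validation_score(**params)
        if best_score is None or score > best_score:
            best_score, best_params = score, params
    return best_score, best_params

grid = {"learning_rate": [0.01, 0.1, 0.3], "depth": [2, 6, 10]}
best_grid_score, best_grid_params = grid_search(grid)            # 9 evaluations
best_random_score, best_random_params = random_search(n_trials=9)  # also 9
```

Both searches use the same budget of nine evaluations. Grid search only ever tests the values listed in the grid, while random search can land on values between them, at the cost of giving no guarantee that any particular combination is tried.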

Optimal Model Selection Procedure

Selecting the optimal model involves a systematic procedure. First, we train several candidate models using different algorithms and hyperparameter settings. Then, we evaluate each model’s performance on a held-out validation set using appropriate metrics (e.g., AUC, F1-score, precision, recall depending on the business context). Finally, we select the model that best balances performance metrics with business objectives.

For instance, a model with slightly lower accuracy but significantly higher recall might be preferred in a medical diagnosis scenario where missing a positive case (false negative) is far more costly than a false positive. The final decision involves a trade-off between model performance and the cost associated with different types of errors. This often requires careful consideration of the specific business context and the relative importance of different types of errors.
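This trade-off can be made explicit by attaching a cost to each type of error and comparing candidates on total cost rather than accuracy. The confusion-matrix counts and the 50-to-1 cost ratio below are invented for illustration; in a real project the costs would come from the business or clinical context.

```python
def expected_cost(fp, fn, cost_fp, cost_fn):
    """Total cost of a model's errors on the validation set."""
    return fp * cost_fp + fn * cost_fn

# Hypothetical validation results for two candidates on 1,000 cases,
# 100 of which are truly positive.
validation_results = {
    "model_a": {"tp": 60, "fp": 10, "fn": 40, "tn": 890},
    "model_b": {"tp": 85, "fp": 55, "fn": 15, "tn": 845},
}

# Assumed costs: a missed positive is 50 times as costly as a false alarm.
COST_FP = 1
COST_FN = 50

summary = {}
for name, c in validation_results.items():
    total = c["tp"] + c["fp"] + c["fn"] + c["tn"]
    summary[name] = {
        "accuracy": (c["tp"] + c["tn"]) / total,
        "recall": c["tp"] / (c["tp"] + c["fn"]),
        "cost": expected_cost(c["fp"], c["fn"], COST_FP, COST_FN),
    }

# Pick the candidate with the lowest total error cost.
best_model = min(summary, key=lambda name: summary[name]["cost"])
```

Here `model_a` has the higher accuracy (0.95 against 0.93), yet `model_b` is selected because its higher recall avoids far more of the expensive false negatives.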

Model Deployment and Monitoring

Deploying a predictive model successfully requires careful planning and execution to ensure the model transitions smoothly from development to a live, production environment where it can provide valuable insights and predictions. Effective monitoring is crucial for maintaining model accuracy and identifying potential problems that may arise over time.

Model deployment involves several key steps, each requiring attention to detail to ensure a successful transition and ongoing performance. Failure at any stage can impact the overall utility and reliability of the predictive model.

Deployment Steps

The process of deploying a predictive model into a production environment typically involves several distinct phases. These phases, while iterative, must be executed thoroughly to minimize risk and maximize the model’s impact.

- Model Packaging: This involves bundling the trained model, along with any necessary dependencies (libraries, configuration files), into a deployable unit. This might be a Docker container, a zip file, or a platform-specific package.

- Infrastructure Setup: Setting up the necessary computing infrastructure (servers, cloud services) to host the deployed model. Considerations include scalability, security, and resource allocation.

- Integration with Existing Systems: Connecting the deployed model to existing data sources and applications to enable seamless data flow and prediction generation. This might involve APIs, databases, or message queues.

- Testing and Validation: Rigorous testing of the deployed model in the production environment to ensure it functions correctly and produces accurate predictions. This includes load testing and stress testing to evaluate performance under various conditions.

- Deployment Rollout: Gradually releasing the model to production, perhaps using A/B testing or a phased rollout to minimize disruption and allow for monitoring of performance in a real-world setting. A canary deployment, releasing the model to a small subset of users first, is a good example of a phased rollout.
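A canary rollout needs a stable way to decide which users see the new model. One simple approach, sketched below as an assumption rather than a prescribed method, is to hash the user ID into one of 100 buckets and send the lowest buckets to the candidate model. The function names and the 5% default are hypothetical.

```python
import hashlib

def routes_to_canary(user_id, canary_percent):
    """Deterministically assign a user to the canary group via a hash bucket."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100        # stable bucket in the range 0..99
    return bucket < canary_percent

def predict(user_id, features, current_model, candidate_model, canary_percent=5):
    """Serve the candidate model to canary users and the current model to the rest."""
    if routes_to_canary(user_id, canary_percent):
        return candidate_model(features)
    return current_model(features)

# Toy stand-ins for two deployed models.
current = lambda features: "current"
candidate = lambda features: "candidate"

served = [predict(f"user-{i}", {}, current, candidate) for i in range(10000)]
canary_share = served.count("candidate") / len(served)   # close to 0.05
```

Because the assignment depends only on the user ID, a given user keeps seeing the same model across requests, and widening the rollout is a matter of raising `canary_percent`.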

Model Performance Monitoring

Continuous monitoring of a deployed model’s performance is essential to identify and address potential issues before they significantly impact its accuracy or reliability. This involves tracking key metrics and establishing alerts to notify stakeholders of any anomalies.

- Accuracy Metrics: Tracking metrics such as precision, recall, F1-score, AUC (Area Under the ROC Curve), and others relevant to the specific model and its task. Regularly comparing these metrics to baseline values established during model development helps to detect performance degradation.

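- Data Drift Detection: Monitoring the characteristics of the input data over time to detect any significant changes (data drift) that might affect model accuracy. Statistical comparisons between recent inputs and the training data, such as a two-sample Kolmogorov-Smirnov test or the population stability index, can flag these changes early. A related problem, concept drift, occurs when the relationship between the inputs and the target changes, and it usually shows up as declining accuracy once true outcomes become available.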

- Latency and Throughput Monitoring: Measuring the time it takes for the model to generate predictions (latency) and the number of predictions it can generate per unit of time (throughput). This is critical for ensuring the model meets performance requirements.

- Error Analysis: Regularly reviewing prediction errors to identify patterns or systematic biases. This can provide valuable insights into areas where the model needs improvement or retraining.

- Alerting System: Implementing an alerting system to notify stakeholders of any significant deviations from expected performance or potential issues. This could involve email notifications, dashboards, or other methods.
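One common way to quantify data drift for a single numeric feature is the population stability index (PSI), which compares how a baseline sample and a recent sample are distributed across the same bins. The sketch below is a minimal standard-library version; the sample values, the bin edges, and the alert threshold of 0.25 (a widely quoted rule of thumb, not a value from this guide) are assumptions.

```python
import math

def population_stability_index(expected, actual, bin_edges):
    """PSI between a baseline sample and a recent sample over shared bins."""
    def proportions(sample):
        counts = [0] * (len(bin_edges) + 1)
        for x in sample:
            # Bin index = number of edges the value has reached or passed.
            index = sum(1 for edge in bin_edges if x >= edge)
            counts[index] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(count / len(sample), 1e-6) for count in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values at training time and in recent traffic.
baseline = [10, 20, 30, 40, 50, 60, 70, 80]
recent = [60, 70, 80, 90, 100, 110, 120, 130]
edges = [25, 50, 75]

psi_unchanged = population_stability_index(baseline, baseline, edges)
psi_shifted = population_stability_index(baseline, recent, edges)

ALERT_THRESHOLD = 0.25   # assumed rule-of-thumb cutoff
drift_alert = psi_shifted > ALERT_THRESHOLD
```

In a monitoring job, a check like this would typically run per feature on a schedule, with the resulting alert feeding the notification channel described in the last bullet above.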

Model Maintenance and Updates

Maintaining and updating a deployed model is an ongoing process that ensures its continued accuracy and relevance. This involves regular evaluation and retraining as needed.

- Regular Retraining: Periodically retraining the model with new data to account for changes in the underlying patterns and relationships. The frequency of retraining depends on factors such as the rate of data drift and the model’s sensitivity to changes in the data.

- Model Versioning: Maintaining a version history of the model, allowing for easy rollback to previous versions if necessary. This is crucial for managing changes and ensuring stability.

- A/B Testing: Using A/B testing to compare the performance of different model versions or updates before fully deploying them to production. This allows for controlled evaluation and minimizes the risk of deploying a less effective model.

- Documentation: Maintaining comprehensive documentation of the model, its training process, and its deployment environment. This facilitates troubleshooting, maintenance, and future updates.

- Monitoring Tools and Dashboards: Utilizing dedicated monitoring tools and dashboards to visualize key performance indicators (KPIs) and facilitate timely identification of potential problems. This provides a centralized view of model health and performance.
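Versioning and rollback can be illustrated with a very small in-memory registry. This is a toy sketch under stated assumptions: the `ModelRegistry` class and its method names are hypothetical, the "models" are plain strings, and a production setup would persist versions in a dedicated model registry or artifact store.

```python
class ModelRegistry:
    """Minimal in-memory registry with version history and rollback."""

    def __init__(self):
        self._models = {}    # version number -> (model, metadata)
        self._history = []   # versions promoted to production, oldest first

    def register(self, model, metadata):
        """Store a new model and return its version number."""
        version = len(self._models) + 1
        self._models[version] = (model, metadata)
        return version

    def promote(self, version):
        """Make a registered version the active production model."""
        if version not in self._models:
            raise KeyError(f"unknown model version: {version}")
        self._history.append(version)

    def rollback(self):
        """Return to the previously promoted version."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def active_version(self):
        return self._history[-1] if self._history else None

    def active_model(self):
        return self._models[self.active_version][0]

registry = ModelRegistry()
v1 = registry.register("churn-model-jan", {"trained_on": "January data"})
v2 = registry.register("churn-model-feb", {"trained_on": "February data"})

registry.promote(v1)
registry.promote(v2)                  # retrained model goes live
restored = registry.rollback()        # monitoring flags a problem; revert to v1
```

Keeping the metadata next to each version (training data, metrics, date) is what makes the documentation and troubleshooting steps above practical.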

Case Studies of Predictive Modeling Applications

Predictive modeling has proven its value across numerous sectors, delivering significant improvements in efficiency, profitability, and decision-making. The following case studies illustrate the diverse applications and impactful results achieved through the strategic use of predictive modeling techniques. These examples highlight the versatility and power of this analytical approach.

Examples of Predictive Modeling Success Across Industries

The application of predictive modeling varies greatly depending on the specific needs and data available within each industry. However, common themes emerge, such as improved forecasting, risk mitigation, and personalized experiences. The table below presents a concise overview of successful applications.

| Industry | Application | Results |
|---|---|---|
| Finance | Credit risk assessment (predicting loan defaults) | Reduced loan defaults by 15%, leading to a significant increase in profitability for a major bank. This was achieved by using a combination of logistic regression and machine learning algorithms to analyze historical loan data, including borrower demographics, credit history, and financial information. The model accurately identified high-risk borrowers, allowing the bank to adjust lending policies and reduce losses. |
| Healthcare | Predicting patient readmission rates | A large hospital system implemented a predictive model to identify patients at high risk of readmission within 30 days of discharge. This allowed for proactive interventions, such as enhanced discharge planning and follow-up appointments, resulting in a 20% reduction in readmission rates and improved patient outcomes. The model incorporated factors such as patient demographics, medical history, and treatment details. |
| Marketing | Customer churn prediction (identifying customers likely to cancel service) | A telecommunications company used predictive modeling to identify customers at high risk of churning. This enabled targeted retention campaigns, offering personalized incentives and improving customer service to those most likely to cancel. The result was a 10% decrease in churn rate, representing substantial cost savings and increased customer loyalty. The model considered factors such as usage patterns, billing history, and customer service interactions. |

Software and Platforms for Predictive Modeling

Predictive modeling relies heavily on the software and platforms used to build, train, and deploy models. The choice of platform significantly impacts efficiency, model complexity, and overall project success. Different platforms cater to varying skill levels, project scales, and specific modeling needs. This section examines several popular options, comparing their strengths and weaknesses.

Popular Predictive Modeling Software and Platforms

A wide array of software and platforms support predictive modeling, ranging from open-source tools ideal for learning and experimentation to commercial solutions offering advanced features and scalability. The selection depends on factors like budget, technical expertise, and the specific requirements of the predictive modeling task.

- R: A powerful open-source programming language and environment for statistical computing and graphics. It boasts extensive libraries (like caret, randomForest, and glmnet) for various statistical and machine learning techniques. R is highly flexible and customizable but requires programming skills.

- Python (with scikit-learn, TensorFlow, PyTorch): Python, with its rich ecosystem of libraries, is another popular choice. Scikit-learn provides a user-friendly interface for various machine learning algorithms. TensorFlow and PyTorch are powerful deep learning frameworks suitable for complex models. Python offers a good balance of ease of use and powerful capabilities.

- SAS: A comprehensive commercial software suite offering a wide range of statistical and predictive modeling tools. It’s known for its robust capabilities, especially in handling large datasets and complex analyses. However, it comes with a significant cost and steeper learning curve.

- SPSS Modeler: A user-friendly commercial platform with a visual interface, making it accessible to users with limited programming experience. It offers a range of statistical and machine learning algorithms, making it suitable for various predictive modeling tasks. While less flexible than R or Python, its ease of use is a major advantage.

- RapidMiner: A visual workflow-based platform for data science, including predictive modeling. It offers a user-friendly interface with drag-and-drop functionality, making it suitable for both beginners and experienced users. It integrates various data sources and algorithms, simplifying the entire predictive modeling process.

- Azure Machine Learning: A cloud-based platform from Microsoft offering a comprehensive suite of tools for building, training, and deploying machine learning models. It supports various algorithms and frameworks, including Python and R, and offers scalability for large-scale projects. Its cloud-based nature allows for easy collaboration and resource management.

- AWS SageMaker: A similar cloud-based platform from Amazon Web Services, providing similar functionalities to Azure Machine Learning. It also supports various algorithms and frameworks and offers scalability for large projects. The choice between AWS SageMaker and Azure Machine Learning often comes down to existing cloud infrastructure and preferences.

Comparison of Features and Capabilities

The platforms listed above differ significantly in their features and capabilities. R and Python offer maximum flexibility and control but require programming expertise. SAS and SPSS Modeler provide user-friendly interfaces but may lack the flexibility of open-source options. Cloud-based platforms like Azure Machine Learning and AWS SageMaker offer scalability and integration with other cloud services. The choice depends on the specific needs of the project and the user’s technical skills. For example, a small project with limited resources might benefit from R or Python, while a large enterprise might prefer a commercial solution like SAS or a cloud-based platform for scalability.

Advantages and Disadvantages of Each Platform

| Platform | Advantages | Disadvantages |
|---|---|---|
| R | Highly flexible, extensive libraries, open-source, large community support | Steep learning curve, requires programming skills |
| Python | Versatile, extensive libraries, large community support, good balance of ease of use and power | Requires programming skills (although the learning curve is generally gentler than for R) |
| SAS | Robust, handles large datasets well, comprehensive features | Expensive, steep learning curve |
| SPSS Modeler | User-friendly interface, good for beginners, range of algorithms | Less flexible than R or Python, can be expensive |
| RapidMiner | Visual workflow, user-friendly, integrates various data sources | Can be less powerful than dedicated programming languages |
| Azure Machine Learning | Scalable, cloud-based, integrates with other Azure services | Requires familiarity with cloud computing |
| AWS SageMaker | Scalable, cloud-based, integrates with other AWS services | Requires familiarity with cloud computing |

In conclusion, predictive modeling tools are invaluable assets for organizations seeking to use data for informed decision-making. By understanding the available techniques, their data requirements, and their practical applications, businesses can use these tools to gain a competitive edge, optimize operations, and anticipate future challenges. The continued evolution of predictive modeling, driven by advancements in AI and big data, promises even more impactful applications in the years to come.

Predictive modeling tools are increasingly used for real-time insights, and the choice of infrastructure significantly affects how well they perform. The decision often hinges on the trade-off between processing data locally, as in edge computing, and leveraging the scalability of the cloud. The best approach depends on factors such as latency requirements and data volume.

Predictive modeling tools also rely on robust infrastructure to handle the large datasets required for accurate predictions. Multi-cloud strategies can provide this scalability and resilience through flexible resource allocation and redundancy. The choice of infrastructure directly affects the performance and reliability of the deployed models.