Containerization and Kubernetes represent a paradigm shift in application deployment and management. Containers let developers package an application and its dependencies into an isolated unit, and Kubernetes orchestrates the deployment and scaling of those containers across clusters of machines. This guide covers the core concepts, benefits, and practical applications of both technologies, with the aim of giving you a clear understanding of how they work and what they make possible.

We’ll explore the fundamentals of containerization, comparing it to traditional virtual machines and highlighting its advantages in terms of resource utilization, portability, and scalability. Then, we’ll dive into the world of Kubernetes, examining its architecture, key components, and deployment strategies. We’ll cover essential aspects such as networking, storage, security, and monitoring, equipping you with the knowledge to effectively leverage this powerful orchestration platform.

Introduction to Containerization

Containerization is an approach to software deployment that packages an application and its dependencies into a single unit called a container. This lets the application run consistently across different computing environments, provided the host offers a compatible kernel and CPU architecture. Containers are often compared to lightweight virtual machines, but instead of emulating hardware they isolate processes on a shared host kernel, which makes them considerably more efficient and portable.

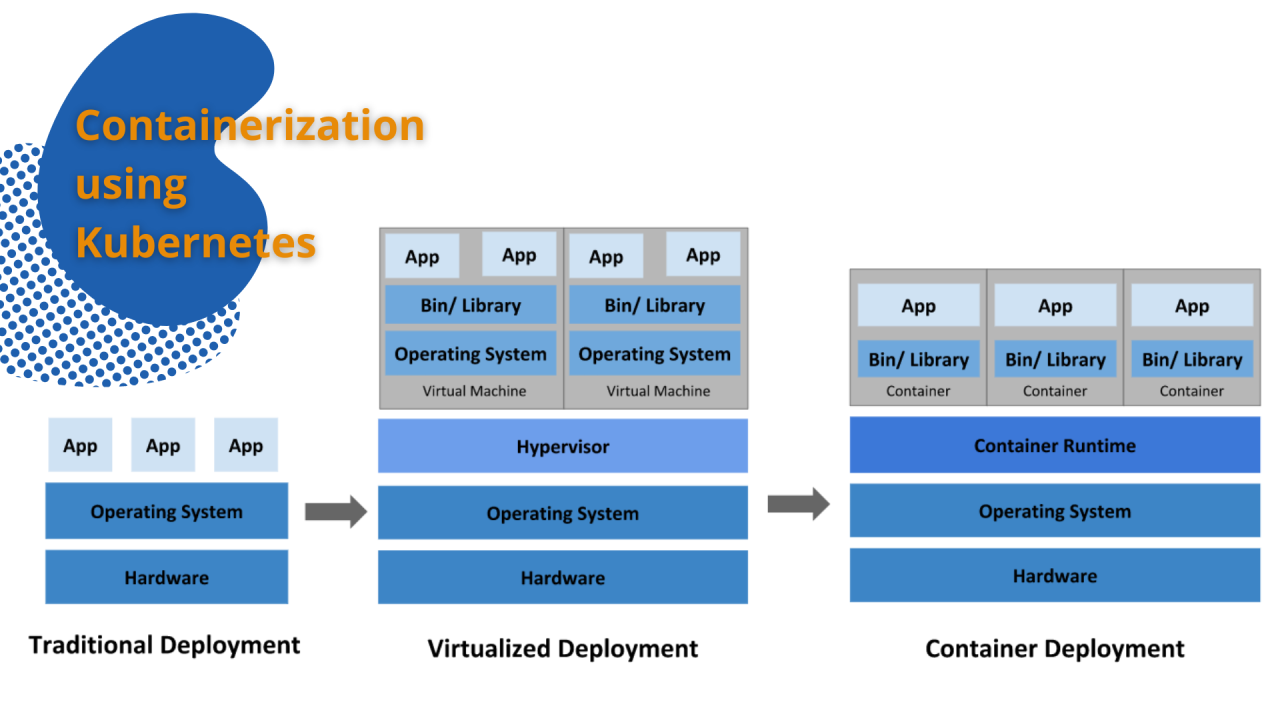

Containerization offers several key advantages over traditional virtual machines (VMs). Each VM runs a full guest operating system on virtualized hardware, whereas containers share the host operating system's kernel, which greatly reduces overhead. The result is faster startup times, more applications per host, and more efficient resource utilization.
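To make the density argument concrete, the Python sketch below compares how many application instances fit in one host's memory under each model. Every figure in it (host memory, host reservation, per-application memory, guest OS overhead, per-container overhead) is a hypothetical placeholder chosen for illustration, not a measurement; real overheads vary widely with the OS image, hypervisor, and workload.

```python
# Back-of-the-envelope density comparison: VMs vs. containers on one host.
# ALL numbers below are hypothetical placeholders, for illustration only.

def instances_per_host(host_mem_mib: int, reserved_mib: int, per_instance_mib: int) -> int:
    """Number of instances that fit in the memory left after the host's own reservation."""
    available = host_mem_mib - reserved_mib
    if available <= 0 or per_instance_mib <= 0:
        return 0
    return available // per_instance_mib

HOST_MEM_MIB = 64 * 1024      # hypothetical 64 GiB host
HOST_RESERVED_MIB = 4 * 1024  # hypothetical memory kept for the host OS itself
APP_MIB = 512                 # hypothetical memory used by one application instance

# Each VM carries its own guest kernel and OS userland (hypothetical 1 GiB).
GUEST_OS_MIB = 1024
# Each container shares the host kernel and adds only a small overhead (hypothetical).
CONTAINER_OVERHEAD_MIB = 32

vms = instances_per_host(HOST_MEM_MIB, HOST_RESERVED_MIB, APP_MIB + GUEST_OS_MIB)
containers = instances_per_host(HOST_MEM_MIB, HOST_RESERVED_MIB, APP_MIB + CONTAINER_OVERHEAD_MIB)
print(f"VMs: {vms}, containers: {containers}")  # VMs: 40, containers: 112
```

The point is the shape of the calculation rather than the numbers: the per-instance guest OS cost dominates for VMs, while containers pay it once, through the shared host kernel.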

Core Concepts of Containerization

Containerization relies on several fundamental concepts. The core component is the container image, a read-only template containing the application's code, runtime, system tools, system libraries, and settings. An image is used to create containers, which are the running instances of the application. Containers are isolated from each other and from the host using kernel features such as Linux namespaces and control groups (cgroups), which keeps applications from interfering with one another; this isolation is lighter-weight, and generally weaker, than that of a VM. A container registry stores and manages container images, making them easy to share and distribute. Finally, container orchestration tools such as Kubernetes (discussed later) automate the deployment, scaling, and networking of containers.
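The split between a read-only image and a running container can be pictured as a stack of layers: the image contributes read-only layers, and each container adds its own thin writable layer on top. Reads fall through to the topmost layer that contains a file, while writes land only in the container's layer, so the image and other containers are unaffected. The Python sketch below is a simplified, in-memory model of this copy-on-write behaviour, loosely in the spirit of union filesystems such as OverlayFS; the class names are hypothetical, and real storage drivers also handle deletions, permissions, and directory merging. Each layer gets a SHA-256 digest of its contents to mirror how registries address image content, though here the digest is computed over a JSON encoding rather than a real layer archive.

```python
import hashlib
import json
from types import MappingProxyType

class ImageLayer:
    """Hypothetical read-only image layer: an immutable mapping of path -> contents."""
    def __init__(self, files: dict[str, str]):
        # Copy the input and expose it only through a read-only view.
        self.files = MappingProxyType(dict(files))
        # Content-addressed ID in the "sha256:<hex>" style used by registries
        # (computed over a JSON encoding here, not a real tar layer blob).
        payload = json.dumps(dict(self.files), sort_keys=True).encode()
        self.digest = "sha256:" + hashlib.sha256(payload).hexdigest()

class Image:
    """Hypothetical image: an ordered stack of read-only layers, base layer first."""
    def __init__(self, layers: list[ImageLayer]):
        self.layers = tuple(layers)

class Container:
    """Hypothetical running instance: the image's layers plus a private writable layer."""
    def __init__(self, image: Image):
        self.image = image
        self.upper: dict[str, str] = {}  # writable layer owned by this container only

    def read(self, path: str) -> str:
        # Check the writable layer first, then the image layers from top to bottom.
        if path in self.upper:
            return self.upper[path]
        for layer in reversed(self.image.layers):
            if path in layer.files:
                return layer.files[path]
        raise FileNotFoundError(path)

    def write(self, path: str, contents: str) -> None:
        # Copy-on-write: modifications go to the container's layer, never the image.
        self.upper[path] = contents

# Usage: two containers created from the same image.
base = ImageLayer({"/etc/os-release": "debian", "/app/config": "debug=false"})
app = ImageLayer({"/app/main.py": "print('hello')"})
image = Image([base, app])

c1, c2 = Container(image), Container(image)
c1.write("/app/config", "debug=true")
print(c1.read("/app/config"))  # debug=true
print(c2.read("/app/config"))  # debug=false: the image and c2 are unaffected
```

Because layers are immutable and content-addressed, many containers (and many images sharing a base layer) can reuse the same layer data, which is part of why containers are cheap to create.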

Benefits of Containers over Virtual Machines

In practice, these differences add up. Containers' smaller footprint and shared kernel let more applications run on a single host, reducing infrastructure costs. Containers also start and stop faster, which makes them well suited to microservices architectures and dynamic environments. Because an image bundles the application with its dependencies, the same image behaves consistently from development to production on any host with a compatible kernel and CPU architecture. Finally, their lightweight nature simplifies deployment and management, streamlining the software development lifecycle.

Common Containerization Technologies

Several technologies power containerization. Docker is the most widely adopted containerization platform, providing tools for building, running, and managing containers. Under the hood, Docker relies on lower-level components for the actual container execution: containerd manages the container lifecycle, and runc creates and runs containers according to the Open Container Initiative (OCI) runtime specification. Podman is another popular container engine; its daemonless architecture avoids a long-running central daemon, which improves security and simplifies management. Together, these tools form a robust and efficient container ecosystem.

Example of a Simple Containerized Application Architecture

Consider a simple e-commerce application broken down into three microservices: a product catalog service, an order processing service, and a payment gateway service. Each microservice is containerized with its own Docker image and deployed to a Kubernetes cluster, which keeps the desired number of replicas of each service running and, when an autoscaler is configured, adjusts that number automatically. Because each service scales independently with its own demand, a traffic spike on the product catalog does not require scaling the payment gateway, which helps maintain availability and performance. The deployment pipeline builds the Docker images, pushes them to a container registry, and deploys them to the Kubernetes cluster using a tool such as Helm.
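In Kubernetes, demand-based scaling of a service like these is typically handled by a HorizontalPodAutoscaler attached to that service's Deployment. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue): no change is made when the ratio is within a tolerance of 1.0 (0.1 by default), and the result is clamped to the configured minimum and maximum replica counts. The Python sketch below reproduces only that rule for a single metric; the real controller also applies stabilization windows, scaling policies, and handling for unready pods, and the per-service CPU figures are hypothetical.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler rule for one metric (e.g. average CPU %)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas  # close enough to target: leave replicas unchanged
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, proposed))

# Hypothetical load on the three services, each scaled independently.
# Tuple: (current replicas, average CPU utilization %, target CPU utilization %)
services = {
    "product-catalog": (3, 90.0, 60.0),
    "order-processing": (2, 62.0, 60.0),
    "payment-gateway": (4, 20.0, 60.0),
}

for name, (replicas, cpu, target) in services.items():
    print(name, replicas, "->", desired_replicas(replicas, cpu, target))
# product-catalog 3 -> 5
# order-processing 2 -> 2
# payment-gateway 4 -> 2
```

Each service's replica count depends only on its own metric, which is what allows the catalog to scale out during a traffic spike while the payment gateway scales in.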

Mastering containerization and Kubernetes can substantially improve the efficiency and scalability of application deployment. By understanding the core concepts, following best practices, and using the tools and techniques discussed, organizations can streamline their workflows, make their applications more resilient, and reduce infrastructure costs through better resource utilization. This guide serves as a foundational resource for navigating this fast-moving landscape and making full use of modern application deployment.
