Cloud performance monitoring is crucial for ensuring the smooth operation and optimal performance of cloud-based applications. Understanding key metrics, implementing effective monitoring tools, and analyzing performance data are essential for identifying bottlenecks, optimizing resource utilization, and maintaining high availability. This guide explores various aspects of cloud performance monitoring, from setting up systems to optimizing costs and addressing security concerns.

We will delve into the core components of cloud performance monitoring, examining different approaches and tools available. We’ll cover practical implementation strategies, including selecting appropriate tools based on application architecture and scale, and offer insights into interpreting key performance indicators (KPIs) to diagnose and resolve performance issues. Furthermore, we will discuss the crucial role of alerting and notifications, cost optimization strategies, and security best practices within the context of cloud monitoring.

Types of Cloud Performance Monitoring Tools

Effective cloud performance monitoring is crucial for maintaining application uptime, optimizing resource utilization, and ensuring a positive user experience. The right tools can provide the insights needed to proactively identify and resolve performance bottlenecks, ultimately saving time and money. Choosing the appropriate tool depends heavily on your specific needs and the complexity of your cloud infrastructure.

Cloud performance monitoring tools are broadly categorized by their functionality. These categories often overlap, with some tools offering features across multiple areas. Understanding these distinctions is key to selecting the most suitable solution for your organization.

Application Performance Monitoring (APM) Tools

APM tools focus on the performance of applications running in the cloud. They provide detailed insights into application code, transaction traces, and user experience. This allows developers to pinpoint performance issues within their applications, rather than just observing overall infrastructure problems. Examples of popular APM tools include Datadog, New Relic, and Dynatrace. These tools typically use techniques like distributed tracing and code-level instrumentation to track the flow of requests through the application, identifying slowdowns or errors.
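The sketch below makes distributed tracing concrete. It records nested, timed spans that share a trace ID, which is the basic data model APM tools build on. The tracer, the `span` helper, and the simulated request are all hypothetical. In production you would use a vendor agent or OpenTelemetry, not hand-rolled code like this.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar

# Hypothetical minimal tracer for illustration only.
_current_span = ContextVar("current_span", default=None)
finished_spans = []  # span records, appended in completion order


@contextmanager
def span(name, trace_id=None):
    """Record a timed span nested under whichever span is currently active."""
    parent = _current_span.get()
    record = {
        "name": name,
        "span_id": uuid.uuid4().hex[:16],
        # Child spans inherit the trace ID so one request can be stitched together.
        "trace_id": parent["trace_id"] if parent else (trace_id or uuid.uuid4().hex),
        "parent_id": parent["span_id"] if parent else None,
    }
    token = _current_span.set(record)
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        _current_span.reset(token)
        finished_spans.append(record)


def handle_request():
    with span("GET /checkout"):
        with span("db.query"):
            time.sleep(0.02)  # simulated slow database call
        with span("render"):
            time.sleep(0.005)


handle_request()
for s in finished_spans:
    print(f"{s['name']:<14} parent={s['parent_id']} {s['duration_ms']:.1f} ms")
```

The `db.query` span accounts for most of the root span's time, which is how a trace points to the slow component.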

Infrastructure Monitoring Tools

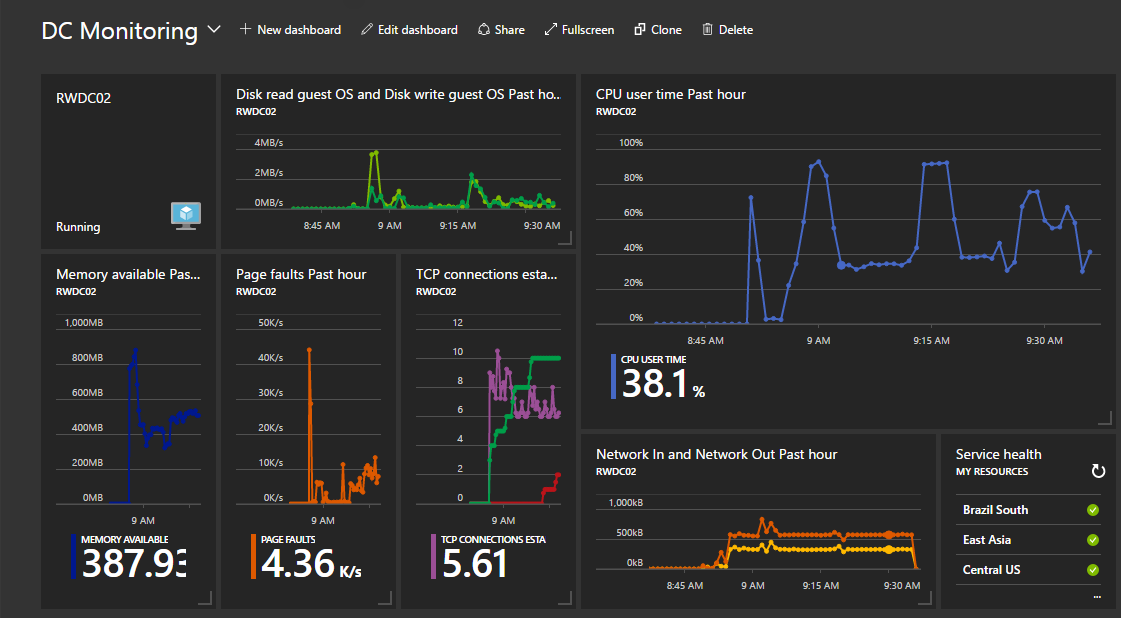

Infrastructure monitoring tools focus on the health and performance of the underlying cloud infrastructure, including servers, networks, and databases. They provide metrics such as CPU utilization, memory usage, network latency, and disk I/O. This data helps identify resource constraints and potential infrastructure bottlenecks. Examples include CloudWatch (AWS), Azure Monitor (Microsoft Azure), and Google Cloud Monitoring (Google Cloud Platform). These tools often integrate with other cloud services to provide a comprehensive view of infrastructure performance.
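Managed agents, such as the CloudWatch agent or Prometheus's node_exporter, collect host metrics for you. To show roughly what such an agent samples, the hypothetical `host_snapshot` function below reads disk usage and load average using only the Python standard library. It assumes a Unix-like host for `os.getloadavg()` and reports `None` for load average elsewhere.

```python
import os
import shutil
import time


def host_snapshot(path="/"):
    """Collect a few host-level metrics with the standard library (illustrative only)."""
    usage = shutil.disk_usage(path)
    snapshot = {
        "timestamp": time.time(),
        "disk_used_pct": 100.0 * usage.used / usage.total,
        "cpu_count": os.cpu_count(),
        "load_avg_1m": None,
    }
    # os.getloadavg() exists only on Unix-like systems.
    if hasattr(os, "getloadavg"):
        snapshot["load_avg_1m"] = os.getloadavg()[0]
    return snapshot


print(host_snapshot())
```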

Log Management Tools

Log management tools collect, analyze, and store logs from various sources within the cloud environment. They enable efficient searching, filtering, and analysis of logs to identify errors, security threats, and performance issues. This allows for proactive identification of problems before they impact users. Examples include Splunk, ELK Stack (Elasticsearch, Logstash, Kibana), and Graylog. These tools often provide dashboards and visualizations to simplify the analysis of large volumes of log data.
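Here is a minimal sketch of the filtering a log management tool performs. It assumes a hypothetical space-separated log format (`timestamp level service message`), keeps the `ERROR` entries, and counts them per service. Real tools index logs for fast search rather than scanning them line by line.

```python
import re
from collections import Counter

# Hypothetical log format: "<ISO timestamp> <LEVEL> <service> <message>"
LOG_LINE = re.compile(r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>\S+)\s+(?P<msg>.*)$")


def errors_by_service(lines, level="ERROR"):
    """Count log lines at the given level per service, skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and m.group("level") == level:
            counts[m.group("service")] += 1
    return counts


sample = [
    "2024-05-01T10:00:00Z INFO checkout order placed",
    "2024-05-01T10:00:01Z ERROR payments card processor timeout",
    "2024-05-01T10:00:02Z ERROR payments card processor timeout",
    "2024-05-01T10:00:03Z WARN inventory stock low",
    "2024-05-01T10:00:04Z ERROR checkout upstream 503",
    "garbled line without structure",
]
print(errors_by_service(sample).most_common())
# → [('payments', 2), ('checkout', 1)]
```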

Comparison of Cloud Performance Monitoring Tools

The following table compares four popular cloud performance monitoring tools across various aspects, including pricing and key features. Note that pricing can vary greatly depending on usage and specific features selected.

| Tool | Pricing Model | Key Features | Strengths |
|---|---|---|---|
| Datadog | Subscription-based, usage-based pricing | APM, infrastructure monitoring, log management, security monitoring, real user monitoring | Comprehensive platform, strong integrations, excellent visualization |
| New Relic | Subscription-based, usage-based pricing | APM, infrastructure monitoring, digital experience monitoring, application security | User-friendly interface, robust APM capabilities, good for troubleshooting application issues |
| Dynatrace | Subscription-based, usage-based pricing | APM, infrastructure monitoring, digital experience monitoring, AI-powered anomaly detection | Automated root cause analysis, strong AI capabilities, reduces manual troubleshooting effort |
| Prometheus | Open-source, self-hosted | Infrastructure monitoring, metrics collection, alerting | Highly scalable, flexible, cost-effective for smaller deployments, large community support |

Analyzing Cloud Performance Data

Analyzing cloud performance data is crucial for maintaining application availability, responsiveness, and cost-effectiveness. Effective analysis involves identifying performance bottlenecks, interpreting key performance indicators (KPIs), and using this information to optimize cloud infrastructure and application deployments. This process allows for proactive problem-solving, preventing performance degradation before it impacts users.

Understanding the root causes of performance issues requires a systematic approach. This involves examining various aspects of the cloud environment, from network latency and database response times to application code efficiency and resource allocation. By correlating data from different sources, a comprehensive picture of performance can be built.

Common Performance Bottlenecks and Root Causes

Performance bottlenecks in cloud environments can stem from various sources. Identifying these requires careful examination of resource utilization, network performance, and application behavior. Common bottlenecks include insufficient compute resources (CPU, memory), slow database queries, network latency, inefficient application code, and inadequate storage I/O.

- Insufficient Compute Resources: High CPU utilization or memory exhaustion can lead to slow application response times and increased error rates. This is often caused by poorly optimized code, resource-intensive processes, or unexpected traffic spikes.

- Slow Database Queries: Inefficient database queries or a poorly designed database schema can severely impact application performance. This can manifest as slow load times or unresponsive interfaces.

- Network Latency: High network latency, resulting from network congestion or geographical distance between components, directly impacts application responsiveness. This is particularly relevant for applications with distributed components or users located far from the cloud infrastructure.

- Inefficient Application Code: Poorly written or unoptimized application code can consume excessive resources and slow down processing. This can be identified through profiling and performance testing.

- Inadequate Storage I/O: Slow storage read/write operations, caused by insufficient provisioned IOPS or throughput, undersized volumes, or slow storage devices, can significantly impact application performance, particularly for applications with large datasets or frequent file access.
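Profiling is the usual way to confirm the "inefficient application code" bottleneck. The sketch below uses Python's built-in `cProfile` and `pstats` modules to profile two hypothetical implementations of the same task. The first does membership tests against a list, which is quadratic overall. The second does them against a set.

```python
import cProfile
import io
import pstats


def common_items_slow(a, b):
    # `x in b` scans the list each time: O(len(a) * len(b)) overall.
    return [x for x in a if x in b]


def common_items_fast(a, b):
    # Build a set once so each membership test is O(1) on average.
    b_set = set(b)
    return [x for x in a if x in b_set]


def profile(func, *args):
    """Run func under cProfile and return (result, text report of the top entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(5)
    return result, out.getvalue()


a = list(range(0, 4000, 2))
b = list(range(0, 4000, 3))
slow_result, slow_report = profile(common_items_slow, a, b)
fast_result, fast_report = profile(common_items_fast, a, b)
print(slow_report)
print(fast_report)
```

Both functions return the same items. The slow version's report, however, shows far more cumulative time spent in `common_items_slow`.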

Interpreting Key Performance Indicators (KPIs)

Key Performance Indicators (KPIs) provide quantitative measures of cloud performance. By monitoring and analyzing these KPIs, administrators can identify potential problems and their severity. Common KPIs include CPU utilization, memory usage, network latency, disk I/O, database query response times, and application error rates.

For example, consistently high CPU utilization (a common rule-of-thumb threshold is sustained use above 80%) could indicate the need for larger virtual machines, additional instances, or optimization of application code. Similarly, slow database query response times could point to the need for database tuning, schema optimization, or improved indexing. High network latency could suggest network infrastructure upgrades or optimization of application architecture. A sudden increase in application error rates might signal a bug in the application code or a problem with a dependent service. By analyzing trends in these KPIs, potential problems can be identified before they lead to major performance issues.
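One way to turn "consistently high" into a rule is to flag a KPI only when several consecutive samples breach its threshold. That way a single spike does not trigger action. The thresholds, metric names, and sample values below are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    limit: float
    sustained_points: int  # consecutive samples that must breach before flagging


# Illustrative thresholds only; tune them to your workload and SLOs.
THRESHOLDS = [
    Threshold("cpu_pct", 80.0, 3),
    Threshold("db_query_p95_ms", 500.0, 3),
    Threshold("error_rate_pct", 1.0, 2),
]


def sustained_breach(samples, limit, sustained_points):
    """True if the last `sustained_points` samples all exceed `limit`."""
    if len(samples) < sustained_points:
        return False
    return all(v > limit for v in samples[-sustained_points:])


def evaluate(kpis):
    """Return the names of KPIs whose recent samples show a sustained breach."""
    return [
        t.metric
        for t in THRESHOLDS
        if sustained_breach(kpis.get(t.metric, []), t.limit, t.sustained_points)
    ]


kpis = {
    "cpu_pct": [62, 85, 88, 91],          # sustained above 80%
    "db_query_p95_ms": [420, 610, 380],   # single spike, not sustained
    "error_rate_pct": [0.2, 0.3],
}
print(evaluate(kpis))  # → ['cpu_pct']
```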

Example Scenario: Diagnosing a Slow Website

Imagine a website experiencing slow load times. By monitoring KPIs, we might observe high CPU utilization on the web server, slow database query times, and increased network latency. This suggests a combination of factors contributing to the performance problem. Further investigation might reveal that the database is undersized for the current traffic load, the application code is inefficient, and the network connection between the web server and the database is congested. Addressing these issues, such as upgrading the database, optimizing the application code, and improving the network connection, would likely resolve the slow load times.
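You can approximate the "correlate data from different sources" step by ranking each metric by its Pearson correlation with page load time. The per-minute samples below are made-up values for the slow-website scenario. A high correlation tells you where to look first; it does not prove cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / (sx * sy)


# Illustrative per-minute samples, not real measurements.
page_load_ms = [850, 900, 1400, 2100, 2300, 1200]
metrics = {
    "web_cpu_pct": [55, 58, 60, 62, 61, 59],
    "db_query_ms": [120, 130, 400, 900, 1000, 300],
    "net_latency_ms": [20, 22, 21, 23, 22, 20],
}

ranked = sorted(
    ((name, pearson(series, page_load_ms)) for name, series in metrics.items()),
    key=lambda item: item[1],
    reverse=True,
)
print([name for name, _ in ranked])  # → ['db_query_ms', 'web_cpu_pct', 'net_latency_ms']
```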

Cost Optimization through Monitoring

Effective cloud performance monitoring is not just about ensuring application uptime; it’s a crucial tool for optimizing cloud spending. By leveraging the data generated through monitoring, organizations can identify areas of inefficiency and implement strategies to significantly reduce their cloud bills without compromising performance. This involves a proactive approach to resource management, focusing on right-sizing instances and eliminating unnecessary costs.

Understanding and analyzing performance metrics provides the foundation for informed decisions regarding resource allocation. Identifying underutilized resources, inefficient processes, and instances running at far below their capacity allows for targeted cost reduction strategies. This data-driven approach contrasts sharply with a reactive model, where cost optimization is only considered after experiencing unexpectedly high bills.

Identifying and Eliminating Wasteful Resource Utilization

Analyzing cloud performance data reveals opportunities to eliminate wasteful resource utilization. This often involves identifying resources that are consistently underutilized or inactive. For example, a database server operating at only 10% capacity is a clear candidate for right-sizing to a smaller instance. Similarly, development or testing environments left running outside active use periods represent significant unnecessary expense. Finding these underutilized resources requires regularly reviewing CPU utilization, memory consumption, storage usage, and network traffic. Tools that visualize resource consumption over time, such as those offered by cloud providers, are invaluable in this process.
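The sketch below turns utilization data into a simple waste report. The resource names, samples, and cut-offs are hypothetical. It judges each resource by peak utilization rather than average, a deliberately conservative choice so that resources with occasional bursts are not flagged. A real review would also consider memory, storage, and network usage.

```python
# Hypothetical inventory: resource ID -> hourly CPU utilization samples (percent).
resources = {
    "db-prod-1": {"cpu": [9, 11, 10, 8, 12, 10]},
    "web-prod-1": {"cpu": [45, 60, 72, 55, 50, 48]},
    "test-runner": {"cpu": [0.5, 0.2, 0.0, 0.1, 0.3, 0.2]},
}


def find_waste(resources, underused_pct=15.0, idle_pct=1.0):
    """Label resources 'idle' (candidate to stop) or 'underused' (candidate to right-size)."""
    findings = {}
    for rid, info in resources.items():
        peak = max(info["cpu"])  # peak, not average, so bursty resources are kept
        if peak < idle_pct:
            findings[rid] = "idle"
        elif peak < underused_pct:
            findings[rid] = "underused"
    return findings


print(find_waste(resources))
# → {'db-prod-1': 'underused', 'test-runner': 'idle'}
```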

Strategies for Right-Sizing Instances

Right-sizing instances involves adjusting the compute capacity of virtual machines to match actual workload demands. Over-provisioning, where instances are allocated more resources than necessary, is a common source of wasted expenditure. Conversely, under-provisioning can lead to performance bottlenecks and application slowdowns. Performance monitoring data, such as CPU utilization and memory usage, provides the necessary insights to determine the optimal instance size. For instance, if a web server consistently shows CPU utilization below 40% over a sustained period, a smaller instance type could be sufficient, resulting in cost savings. Regularly reviewing and adjusting instance sizes based on observed performance metrics is crucial for long-term cost optimization.
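The right-sizing logic can be sketched as picking the smallest instance whose projected CPU utilization stays under a target. The sketch makes three simplifying assumptions:

- The catalog is hypothetical and its instances differ only in vCPU count.
- CPU load scales linearly with vCPUs.
- The 95th percentile of observed samples is the sizing basis.

In practice, memory, network, and burst behavior need the same treatment.

```python
import math

# Hypothetical instance catalog: (name, vCPUs), ordered smallest first.
CATALOG = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def recommend_size(current_vcpus, cpu_samples_pct, target_pct=60.0, percentile=0.95):
    """Pick the smallest instance whose projected percentile CPU stays at or below target_pct."""
    ordered = sorted(cpu_samples_pct)
    # Nearest-rank percentile of utilization observed on the current instance.
    idx = max(0, math.ceil(percentile * len(ordered)) - 1)
    observed_pct = ordered[idx]
    vcpus_needed = current_vcpus * observed_pct / 100.0  # busy vCPUs at that percentile
    for name, vcpus in CATALOG:
        if vcpus_needed / vcpus * 100.0 <= target_pct:
            return name
    return CATALOG[-1][0]  # nothing meets the target; stay on the largest size


# A web server on "large" (8 vCPUs) that rarely exceeds 28% CPU.
samples = [20, 22, 25, 28, 24, 21, 23, 26, 27, 22]
print(recommend_size(8, samples))  # → medium
```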

Automating Cost Optimization

Automation plays a vital role in continuously optimizing cloud spending. Cloud providers offer services and tools that automate resource scaling based on real-time performance data. For example, auto-scaling features adjust the number of running instances in response to changes in demand, so resources are consumed only when needed. Similarly, right-sizing tools can analyze performance metrics and recommend, or in some setups automatically apply, smaller or better-matched instance types, further reducing costs. Automation reduces the manual effort that cost optimization requires and keeps savings continuous rather than periodic.
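Auto-scaling on a utilization target typically uses a proportional rule. The Kubernetes Horizontal Pod Autoscaler, for example, documents `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below implements that rule with min/max bounds and a tolerance band to avoid flapping. The default limits and tolerance are illustrative assumptions.

```python
import math


def desired_replicas(current, metric_value, target_value,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional target-tracking scaling decision."""
    ratio = metric_value / target_value
    # Ignore small deviations from the target to avoid flapping between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90.0, 60.0))  # → 6
print(desired_replicas(4, 20.0, 60.0))  # → 2
print(desired_replicas(4, 63.0, 60.0))  # → 4 (within tolerance)
```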

Scalability and Performance of Monitoring Systems

Cloud performance monitoring systems face significant challenges as the scale and complexity of cloud environments grow. The sheer volume of data generated by applications, infrastructure, and users demands robust, efficient solutions so that monitoring stays effective and insightful rather than becoming a bottleneck itself. Failing to address scalability issues can lead to incomplete data, delayed alerts, and ultimately an impaired ability to maintain application performance and user experience.

The primary challenge lies in processing and storing the massive amounts of telemetry data generated by modern cloud environments. This data includes metrics, logs, and traces, all of which require efficient ingestion, processing, and storage mechanisms. As the number of monitored resources and the frequency of data collection increase, the system’s capacity must scale proportionally to avoid performance degradation. Furthermore, the need for real-time or near real-time analysis adds another layer of complexity, demanding highly optimized data pipelines and query engines.
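A back-of-the-envelope estimate shows how quickly telemetry volume grows. Samples per second equal the number of resources times the series collected per resource, divided by the collection interval. The `bytes_per_sample` figure depends heavily on the storage engine and its compression, so treat the value used here as an assumption.

```python
def telemetry_load(resources, series_per_resource, interval_s, bytes_per_sample):
    """Return (samples per second, storage in GB per day) for a metrics pipeline."""
    samples_per_s = resources * series_per_resource / interval_s
    bytes_per_day = samples_per_s * 86_400 * bytes_per_sample
    return samples_per_s, bytes_per_day / 1e9


# 5,000 resources, 100 series each, collected every 10 s, ~2 bytes/sample (assumed).
rate, gb = telemetry_load(5_000, 100, 10, 2)
print(f"{rate:,.0f} samples/s, {gb:.2f} GB/day")  # → 50,000 samples/s, 8.64 GB/day
```

Halving the interval to 5 s doubles both figures, which is why collection frequency is one of the first levers to review.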

Data Ingestion and Processing Techniques

Efficient data ingestion is crucial for maintaining system performance. Techniques such as batch processing, stream processing, and message queues are commonly employed to handle high-volume data streams. Batch processing excels at handling large volumes of historical data, while stream processing enables real-time analysis of incoming data. Message queues provide a buffer between data sources and processing units, absorbing bursts and reducing the risk of data loss during periods of high load. For example, a system might use Kafka as a message queue to handle incoming metrics, then employ Apache Flink or Spark Streaming for real-time processing, and finally store processed data in a time-series database like InfluxDB or Prometheus.
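The buffer-then-process pattern can be sketched in-process. Here Python's `queue.Queue` stands in for Kafka, and a consumer loop stands in for a stream processor computing tumbling-window averages. Unlike Kafka, an in-memory queue offers no durability or replay. The sketch only illustrates buffering, backpressure via a bounded queue, and windowed aggregation.

```python
import queue
import threading
from collections import defaultdict

# Bounded buffer: producers block when it is full instead of exhausting memory.
buffer = queue.Queue(maxsize=1000)
STOP = object()  # sentinel marking the end of the stream


def producer(samples):
    for sample in samples:
        buffer.put(sample)  # blocks while the buffer is full (backpressure)
    buffer.put(STOP)


def consume_tumbling_windows(window_s=10):
    """Average each metric over fixed, non-overlapping time windows."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    while True:
        item = buffer.get()
        if item is STOP:
            break
        ts, metric, value = item
        key = (metric, int(ts // window_s) * window_s)  # (metric, window start)
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


samples = [(0, "cpu", 40.0), (4, "cpu", 60.0), (12, "cpu", 90.0), (15, "cpu", 70.0)]
t = threading.Thread(target=producer, args=(samples,))
t.start()
windows = consume_tumbling_windows()
t.join()
print(windows)  # → {('cpu', 0): 50.0, ('cpu', 10): 80.0}
```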

Data Storage and Retrieval Optimization

Storing and retrieving large volumes of monitoring data efficiently is another critical aspect of scalability. Time-series databases, specifically designed for high-volume, time-stamped data, are well-suited for this purpose. These databases employ specialized indexing and query optimization techniques to ensure fast retrieval of relevant data. Furthermore, techniques like data partitioning, sharding, and data compression can significantly improve storage efficiency and query performance. For instance, a system might partition data based on geographical location or application, allowing for faster querying of specific subsets of the data. Employing compression algorithms like Snappy or LZ4 can reduce storage space requirements and improve data transfer speeds.
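The sketch below combines partitioning and compression. It groups hypothetical metric points into one partition per application per UTC day, then compresses each batch. It uses `zlib` from the standard library in place of Snappy or LZ4, which are not in the standard library and are designed to favor speed over compression ratio.

```python
import json
import zlib
from collections import defaultdict
from datetime import datetime, timezone


def partition_key(point):
    """Hypothetical scheme: one partition per application per UTC day."""
    day = datetime.fromtimestamp(point["ts"], tz=timezone.utc).strftime("%Y-%m-%d")
    return f"{point['app']}/{day}"


def write_partitions(points):
    """Group points by partition key and compress each partition's batch."""
    batches = defaultdict(list)
    for p in points:
        batches[partition_key(p)].append(p)
    return {
        key: zlib.compress(json.dumps(batch).encode("utf-8"))
        for key, batch in batches.items()
    }


points = [
    {"ts": 1_700_000_000 + i * 10, "app": "checkout" if i % 2 else "search", "cpu": 40 + i % 5}
    for i in range(1000)
]
stored = write_partitions(points)
raw = len(json.dumps(points).encode("utf-8"))
compressed = sum(len(blob) for blob in stored.values())
print(sorted(stored), f"raw={raw} B, compressed={compressed} B")
```

A query about one application on one day then reads and decompresses a single partition rather than the whole dataset.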

Alerting and Notification System Optimization

The alerting system, responsible for notifying administrators of critical events, needs to scale effectively without generating excessive false positives or failing to detect actual problems. This often requires sophisticated filtering and aggregation mechanisms to reduce the volume of alerts. Employing machine learning algorithms to identify anomalies and predict potential issues can further refine alerting processes. For instance, an anomaly detection system might use statistical methods to identify unusual patterns in metrics, reducing the number of irrelevant alerts while still highlighting critical issues promptly. Implementing intelligent routing and notification mechanisms ensures alerts reach the right people in a timely manner, regardless of the volume of events.
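A rolling z-score is one of the simplest statistical anomaly detectors. A point is flagged when it lies more than a chosen number of standard deviations from the mean of a recent window. The window size, threshold, and latency series below are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def zscore_anomalies(values, window=20, threshold=3.0):
    """Return indices of points more than `threshold` standard deviations
    away from the mean of the preceding `window` points."""
    history = deque(maxlen=window)
    anomalies = []
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            # Skip flat windows (sigma == 0) to avoid division by zero.
            if sigma > 0 and abs(v - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(v)
    return anomalies


latency_ms = [100 + (i % 5) for i in range(40)]
latency_ms[30] = 180  # injected spike
print(zscore_anomalies(latency_ms))  # → [30]
```

Ordinary jitter between 100 and 104 ms stays well under the threshold, so only the injected spike produces an alert.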

Effective cloud performance monitoring is not merely a technical exercise; it’s a strategic imperative for businesses relying on cloud infrastructure. By proactively monitoring performance, identifying bottlenecks, and optimizing resource allocation, organizations can significantly enhance application availability, reduce operational costs, and ensure a superior user experience. The insights gained from robust monitoring practices empower informed decision-making, leading to improved efficiency and a competitive advantage in the dynamic cloud landscape. This guide provides a foundation for building a comprehensive and effective cloud performance monitoring strategy.